Biologically Motivated Systems

Abstract

Recently, the computer software society is becoming aware that the traditional programming solutions are increasing in complexity day by day. To solve a highly complex problem, a highly complex solution must be also developed. The chance of an error being generated is very high; and moreover complex programs tends to crash more often and if the code is changed to rectify the error, another error may emerge. The maintenance and upgrade of the code is very costly and can often cause a company a fortune. Therefore many fortune 500 companies are searching to more efficient solutions for economic and chronological reasons. They have recognized that solutions to problems encountered in real life in the modern society are not conceivable even by the intelligence of human beings. A higher intelligence is required. But us there a higher intelligence than human beings? Yes There is. Although no individual entity in a species is capable of displaying intelligence as high as humans, groups of animals often exhibit behavior that are highly intelligent than the individual mettle., and that too in orders of magnitude, when it comes to solving a particular problem. The intelligence emerging from such groups are known as Swarm Intelligence. Swarm Intelligence often exceed the human intelligence by many times. Therefore, it seems reasonable to leave the complex and huge tasks to such intelligence and have them solved. Setting simple rules and making the swarm elements interact with each other with result in the solution of the problem leads to the success of a biologically motivated system.

Contents

Abstract

Contents

Acknowledgements

Introduction

1 Emergent Behavior

1.1 Strategy

1.2 Stigmetry

1.3 G-Type/P-Type Distinction and Emergent Behavior

1.4 Examples of Emergent behavior

1.4.1 Flocking

2 Self-organization

3 Swarm Intelligence

3.1 Communication

3.2 Cooperation

3.3 Army ant behavior

3.4 Termite construction

3.5 Swarm taxonomy

4 Behavior model approach to multiple robot systems

4.1 Basic behaviors

4.2 Combining basic behaviors

5 Issues with multiple robot systems

5.1 Cooperation through communication

5.2 Specialization amongst agents

6 Modular reconfigurable robotics

7 Predator-Prey model

8 Applications

Conclusion

References

INTRODUCTION

Nature has been in the constant process of evolving better and better solutions for creating more and more intelligent and adaptive animals through various methods. These methods have attained perfection in the course of millions of years, which if they are replicated into computer systems, can result in very efficient algorithms. The use of examples from biology is a well trodden path to understanding the behavior of complex systems. The natural world is after all the global ecosystem in which we and all other organisms live out our lives and contains species which are either in competition or collaboration or symbiotic in their relationships. So it is natural for us to look for similarities between the way animal communities live and computer programs. We can use biological system as metaphors or analogies. If the metaphor seems to be working in pointing up similar features we test it as a metaphor for explaining the possible relationships that exist. Taken farther we may even build computer models which simulate to some degree actual behavior. But there is a limit. True complex systems are evolving systems and as such are neither predictable nor can be expected to repeat their history in any exact way. Groups of animals often exhibit behavior that are highly intelligent than the individual mettle. These behaviors are called group behavior. For example are swarming of bees, flocking of birds, herding of cattle, schooling of fish etc. The intelligence emerging from such groups are known as Swarm Intelligence. Swarm Intelligence often exceed the human intelligence by many times. Therefore, it seems reasonable to leave the complex and huge tasks to such intelligence and have them solved. But the tricky part is the incorporation of thesethat algorithms into the computer. Setting simple rules and making the swarm elements interact with each other with result in the solution of the problem leads to the success of a biologically motivated system.

1.

Emergent Behavior

The interaction of a group of agents, even simple ones, may lead to interesting emergent collective behaviors at the group level. Common examples are given by social insects—ants, termites, bees and wasps—and by swarming, flocking, herding, and shoaling phenomena in groups of vertebrates. The abilities of such systems appear to transcend the abilities of the constituent individual agents. In most biological cases studied so far, the robust and capable high level group behavior has been found to be mediated by nothing more than a small set of simple low level interactions between individuals, and between individuals and the environment. The swarm intelligence approach emphasizes distributedness and exploitation of direct (agent-to agent) or indirect (via the environment) local interactions among relatively simple agents. The main advantages of the application of the swarm are three-fold:

1

Scalability

The control architecture is kept exactly the same from a few units to thousands of units.

2

Flexibility

Units can be dynamically added or removed, they can be given the ability to reallocate and redistribute themselves in a self organized way.

3

Robustness

The resulting collective system is robust not only through unit redundancy but also through the unit minimalist design.

In the last few

years, the swarm intelligence control

principles have been successfully applied

to a series

of case studies in collective robotics: aggregation and segregation,

beacon and

odor localization, collaborative mapping, collaborative transportation,

work

division and task allocation, flocking and foraging. All these tasks

have been

performed using groups of simple, autonomous robots or embodied

simulated

agents, exploiting local communication forms among teammates (implicit,

through

the environment, or explicit, wireless communication), and fully

distributed

control.

Many animal social organizations display the characteristics of complex systems in which the dynamic interaction of individuals both with each other and with the environment give rise to emergent and often stable patterns on a macro level. Human organizations consist of highly autonomous individuals, but we can gain some insight into why they have the characteristics they do by looking at the behavior of animals. Why, for example, do birds fly in particular formation, or bees swarm or ant colonies show a particular division of labor? In an ant colony there may be 'stingers' and 'foragers'. When there is numerous prey there are more stingers than foragers. When the prey is thin on the ground there are more foragers. Stingers and foragers have different 'rules' of behavior but the number of stingers and foragers in the community varies according to its needs. Interactions between individuals lead to observed patterns of activity.

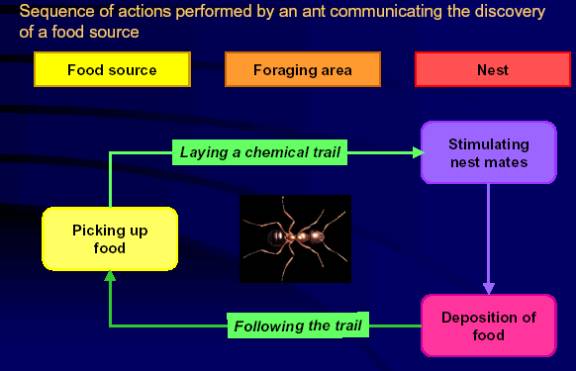

- 'Rule' one for a foraging ant might be 'find food and take it to the nest but leave a chemical trail'.

- 'Rule' two might be 'if you’re wandering about and you come across a trail then follow it'.

- 'Rule three might be 'if you're not carrying food and you haven't come across a trail then wander randomly until you find one'.

Put these rules together for an ant colony and you get a particular kind of emergent behavior. Models based on it offer a way to solve what is known as the 'traveling salesman problem' which involves finding the shortest route to service a number of randomly scattered clients. If robot ants are programmed to behave as described above the shortest chemical trail routes to food will be used by more and more ants. This is because those ants which use the shortest routes will get back to the nest and get out again sooner than the others.

The observed feature is that there is a greater density of ants on the shortest routes to food sources. The immune system is a useful example in understanding how organizations adapt to the environment. Antigens can be taken as the environmental condition that leukocytes react to by producing antibodies. Suppose we have autonomous robots that are programmed to deal with objects in the environment in certain ways. Each individual robot 'experiences' its own particular portion of the environment but in doing so at the same time feeds back information to other robots. This activity builds up a collective history and a collective 'strategy' which is constantly being modified depending on how appropriate it is to the strategy of each individual robot. In working from such examples we conclude that local responsiveness to environmental stimuli is important in gaining a collective strategy for dealing with change. Low level coordinated individual responses give emergent patterns to the whole and simple rules lead to complex behavior.

Figure 1

Foraging algorithm for ants

Such a system gains a 'robustness' which is not dependent on any single individual. If a particular individual robot becomes defunct another can always take its place. Valuable insights can be gained by building such models, though there is at present no mathematical theory that is sophisticated enough to describe them, let alone the kind of patterns that might emerge in social organizations in which there is a high degree of autonomy such as those of the human kind.

1.1

Strategies

Simple strategies

for coordinating action

·

parallelism

·

parallelism

plus interaction through the shared environment

·

control by

location in an inhomogeneous environment

·

differential

attraction recruitment and competition

·

direct and

indirect communication

·

less internal

state helps

·

differential

retention

·

foraging for

work

·

less internal

state helps

·

stigmetry

1.2

Stigmetry

Stigmetry is a concept occasionally used in biology to describe the influence on behavior of the persisting environmental effects of previous behavior. It was originally proposed by P.P. Grassé to explain some of his observations on termite building behavior. P.P. Grassé had observed that worker termites in the presence of particular configurations of a construction (and of other workers) would be stimulated to a high degree of activity, and would tend to add building material to specific parts of the construction. As the construction was changed by these additions, the site of addition of further material would be modified, leading to the progressive growth and completion of the feature; the termites would then switch to constructing another such feature, or would begin a new task apparently triggered by the presence of the completed feature. The phrasing of his introduction of the term is worth noting:

“The coordination of tasks and the regulation of constructions does not depend directly on the workers, but on the constructions themselves. The worker does not direct his work, but is guided by it. It is to this special form of stimulation that we give the name STIGMETRY.

In the English summary, the concept is expressed more directly:

“The stimulation of the workers by the very performances they have achieved is a significant one inducing accurate and adaptable response, and has been named stigmetry.”

If stigmetry is indeed at the root of the building behavior of termites, ants, bees, wasps, and other social insects, then it is certainly a powerful principle, as social insect constructions are remarkable for their complexity, size, and adaptive value. However, it is possible to extend the idea easily to other domains; it can then be seen as an even more impressive and general account of how simple systems can produce a wide range of apparently highly organized and coordinated behaviors and behavioral outcomes, simply by exploiting the influence of the environment. In P.P. Grassé's vision, a worker deposits a piece of building material (does 'work') in a particular location; this changes the sensory input subsequently obtained at that location, and hence may change the behavior produced (and the work done) at that location in the future. If a drop of pheromone was deposited instead, it could also change the behavior at that location in the future, at least until such time as it had completely evaporated. The laying and sensing of pheromones, especially in the form of chemical trails, underlies many of the spectacular abilities of ants, especially in the control of foraging, and is clearly an instance of P.P. Grassé's concept; the modern practice is to extend the definition of stigmetry by replacing the sense of 'work' with the sense of 'any environmental change produced by the animal'. (In fact the regulation of termite building behavior is now understood to involve pheromones as well as constructional features.)

Although there have been several modern treatments of stigmetry as a general phenomenon, there is still room for more precision in its definition. For instance, P.P. Grassé's original sense of 'stimulation' should formally be refined. All that is necessary for stigmetry to occur is for the outcome of the behavior of the relevant agent to be appropriately affected by previous environmental changes, and this can happen in a number of distinct ways:

(i) The agent's choice of action may be affected (a qualitative effect)

(ii) The selected action may be unchanged, but the exact position, strength, frequency, latency, duration, or any other parameter of the action may be affected (a quantitative effect)

However, there is also a third possibility which is not included in P.P. Grassé's formulation:

(iii) A previous action at a location might affect neither the choice nor the parameters of a subsequent action, but only the outcome (a qualitative and/or quantitative effect)

This requires some explanation. Consider a car being driven along a muddy track. Although the driver might try to steer a particular course, the wheels may settle into deep ruts which take the car along another course. The actions taken by previous drivers have affected the outcome of the actions taken by the present driver. (Incidentally, he will have

further deepened

the ruts, and will have a still harder

time of it the next time.) This influence may be thought of as passive

stigmetry

whereas (i) and (ii) may be thought of as active in that they affect

the agent

itself. Passive stigmetry is very close to purely physical situations

where

some constantly acting force - often a fluid - changes the environment

in such

a way as to change its future effect on the environment; for example,

the

formation of sand dunes, river deltas, and meandering rivers are all

instances

of this. We are now in a position to ask how stigmetry can actually

produce

complex patterns, whether of material or behaviors. Stigmetry is

essentially a

mechanism which allows an environment to structure itself through the

activities of agents within the environment: the state of the

environment, and

the current distribution of agents within it, determines how the

environment

and the distribution of agents will change in the future. As has been

made

clear by Bonabeau et al, any structure emerging from this repeated

interaction

develops by a process of self organization (SO).

There are several other comments which may usefully be made about stigmetry, and which can increase our understanding. One approach to stigmetry is simply to consider the minimal qualities of agent and environment which are necessary to support it. An agent has two key abilities:

- it can move through the environment

- it can act on the environment.

The environment must be able to be changed locally by agents; and such changes must persist long enough to affect the choice, parameters, or consequences of agents' behavior. (This effectively rules out stigmetry in empty or highly dynamic environments, such as space, air, and water.)

Change can be reduced to a small number of categories: material can be taken from the environment, or added to it, or some local quality of the environment can be altered. The scope of stigmetry is thus defined: the three types of environmental change may produce the three types of stigmergic action on the two agent abilities. It should be clear that some form of stigmetry must inevitably be on operation in many biological systems, and can be expected to occur in many artificial systems when they are widely deployed in the real world. However, only those instances of stigmetry which give rise to SO will produce noticeable or useful effects Some additional clues to the origins and underlying principles of stigmetry can be gathered from the observation that, as P.P. Grassé pointed out, there are two ways of structuring the generation of behavioral sequences in insects (and, by extension, in simple agents of any type). In the first, found in solitary species such as the digger wasp, the execution of the first movement in a sequence sets some internal state which then, often in conjunction with some appropriate external cue, initiates the second movement, and so on. In the second, found in both solitary and social insects, there is no such internal state; the external cue alone is sufficient. The second method often requires that the external cue is correlated with the successful completion of the first movement. This second strategy is more appropriate for social insects, for many reasons; more importantly, it sets the scene for stigmetry. Because there are many identical agents available, there is no longer any requirement that a connected sequence of movements (or sub-tasks making up a task) must be carried out by a single agent. The presence of the cues alone will ensure that a complete sequence is executed, even if each movement is performed by a different agent. (Where there is no suitable cue available from the end state of the sub-task itself, it may be necessary to augment the sub-task to provide some additional external cue, or sign.) In particular, where there are many similar cues for a certain sub-task at a given location, the rate of performance of the sub-task will be a function of the number of agents at that location. (This would not necessarily be the case if an agent had to be in a particular internal state in order to be able to respond to the rescue.) If there are many locations with such cues, the sub-task will be performed fastest at the locations which have the greatest numbers of agents present. Stigmetry can thus control the morphogenetic development of a construction or other spatial pattern by controlling the distribution of agents within the environment rather than just by controlling the elicitation of building actions at particular sites. Some constraints placed on stigmergic construction algorithms which do not control agent distribution were identified in a computer simulation of a task inspired by the building behavior of wasps.

Some of the most useful insights into stigmetry have been provided by simulations. For example, in their paper on 'The dynamics of collective sorting: robot-like ants and ant-like robots',

Deneubourg et al presented a simulation showing that simple agents, specified in terms which could equally well apply to ants or robots, could use stigmetry to achieve two generic tasks known to be performed by ants, and to be of fundamental importance to them: the clustering of scattered objects of a single type and the grouping and sorting of objects of two different types. For sorting, the agents needed to be able to sense the local densities of the different types of brood items, which was achieved by using a short-term memory, and also needed to know the type of any brood item they were carrying. Clustering was the result of the mechanism operating on only a single type of item.

However, studies

using artificial physical agents (robots)

may be able to yield deeper insights, perhaps because they are embedded

in

real-world physics, and share its constraints and opportunities with

stigmergic

social insect systems. Beckers, Holland, and

Deneubourg were able to achieve clustering with an even

simpler algorithm, using physical robots which were unable to detect

whether or

not they were moving any objects, which had no memory, and which could

sense

the local density of objects only as being below or above a fixed

threshold.

The mechanism was thought to be a form of stigmetry, acting to produce

self-organization.

Small clusters were formed at first through the action of the threshold

mechanism; by random accretion, some became larger than others; as

larger

clusters were less likely to lose objects and more likely to gain them

than

smaller clusters, the eventual outcome was a single large cluster.

- an insect’s actions are

determined or influenced by the consequences of another insect’s

previous action

- this can be seen as a

form of indirect communication

- works best when actions

are not internally chained

Stigmetry may be

classified into the

following different categories:

1.1.1.

Active stigmetry affects the

action:

- cue-based stigmetry

(sematectonic

communication)

- sign-based stigmetry

(purely communicative,

often chemical)

1.1.2.

Passive stigmetry affects the consequences of the action

1.1.3.

Quantitative

or continuous stigmetry:

- the relevant stimulus is

a continuous

variable

- the effect may be to

modulate the action,

or to switch to another action

Example: termite

construction

1.1.4.

Qualitative

or discrete stigmetry:

- the relevant stimulus is

a discrete

variable

- the effect is almost

always to switch to

another action

Example: paper wasp nest

construction

1.3 G-Type/P-Type

Distinction and Emergent Behavior

“In the context of Artificial Life, we need to generalize the notions of genotype and phenotype, so that we may apply them in non-biological situations. The GTYPE, essentially, is the specification for a set of machines, while the PTYPE is the behavior that results as the machines interact with one another in the context of a specific environment. This is the bottom-up approach to the generation of behavior.” Langton, 1989, pp. 22-23] Langton’s definition of GTYPE and PTYPE is not so much a generalization of the genotype/phenotype distinction in biology, as it is a framework to conceptualize emergent behavior in Artificial Life. It states the requirement of a minimum of two levels of description for models of emergence. The first specifically describes the level of the rules of dynamics (e.g. laws of physics or cellular automata rules). The second is the description of whatever global behavior one decides to observe (e.g. self-organization, function, patterns, etc.). However, this distinction fails to generalize the biological genotype/phenotype distinction, since the genotype does not define the laws that allow the phenotype to self-organize (protein folding), those are simply the chemical laws of the constituents of proteins (amino acid chains). The genotype merely offers the initial conditions for such a process of self-organization, in this sense it can be seen more as data than as a program for a phenotype. This problem can be easily recognized when we realize that the biological genotype, depending on the level of description chosen, can be seen both as a GTYPE and as a PTYPE. The GTYPE of the genotype being the chemical rules of interaction between the components of nucleic acids, and the PTYPE being its self-organization into DNA strands and its subsequent utilization as a genetic information carrier. Likewise, the biological phenotype may also be granted a GTYPE and PTYPE description. The former being the rules of interaction of protein constituents, and the latter being the functions associated with the specific phenotype (e.g. catalytic behavior).

1.4

Examples

of

Emergent behavior

1.4.1

Flocking

Flocking occurs in nature and is exhibited by birds, fish and some insects. The sight of a migrating flock of birds is one we are all familiar with. This behavior is based on the principal that there is safety in numbers and the whole is more important than the parts.

A flock of birds gives the appearance of a larger entity and dissuades attackers. If a flock or swarm is attacked, the survivors can scatter and regroup at a safe distance. This scattering can confuse predators and prevent them from capturing more than one or two members of the flock.

Flocking

phenomena

- Rapid

directed movement of the whole flock

- Reactivity

to predators (flash expansion, fountain effect)

- Reactivity

to obstacles

- No

collisions between flock members

- Coalescing

and splitting of flocks

- Tolerant

of movement within the flock, loss or gain of flock members

- No

dedicated leader

- Different species can have different flocking characteristics – easy to recognize but not always easy to describe

- Turning

is not simultaneous

- A

wave of turning propagates through the flock from the site of the

disturbance (front, back, side)

- Each

bird appears to time its turn to fit in with the approaching wave – the

‘chorus line hypothesis’

- No

collisions ever observed

The three rules that implement flocking are:

1 Cohesion: Each boid shall steer to move toward the average position of local flock mates.

2 Alignment: The boids will align to the direction their neighbors are traveling.

3 Separation: All boids in the flock will maintain a separation distance from their siblings.

The algorithm runs as follows for each boid in the flock:

For each detectable neighbor or barrier

1 If that neighbor is a sibling (of the same color) then the target point is a weighted average of alignment and either attraction, or repulsion if the boid is too close.

2 Else if the neighbor is of another color or a Barrier, the target point is in the opposite direction to the other Bird or Barrier

3 When all the detectable boids have been accounted for, take a weighted average of the various target points.

4 Move towards this point.

Flocking

manages

group movement very well:

· Rapid directed

movement of the whole flock

· Reactivity to

predators (flash expansion, fountain effect)

· Reactivity to

obstacles

· No collisions

between flock members

· Coalescing and

splitting of flocks

· Tolerant of

movement within the flock, loss or gain of flock members

In

addition, the

form of the flock may bring benefits in energy savings.

2

Self-Organization

A self-organizing system is a system that tends to improve its performance in the course of time by making its elements better organized for achieving the goal. This formulations includes the special case in which the goal is to achieve a high degree of organization (order) of relevant entities from low degree of their organization (disorder, chaos).” [George Klir] We should start the study of self-organization and complex behavior with the thought that “Complexity exists, in some murky sense, in the eye of the beholder” [Horgan].Self-organizing systems as a case of goal-oriented systems that are capable, with no explicit outside help, of improving their performance while pursuing their goals, and which must be evaluated with some performance function. However, this goal (e.g. order, complex behavior) is established in relation to some observer interested in a particular behavior. This is the reason why the study of emergent, interesting, complex behavior is a tricky affair.

We can all agree on the simple local rules causing the emergent global behavior, but the latter is a more subjective affair since it is not explicitly programmed or described in physical terms. It is instead an observed behavior, relevant for some observer with some goal like understanding life or building some sort of computational engine. Kauffman calls for a new statistical mechanics to understand the behavior of self-organizing computational structures, in other words, new higher-level parameters (with physical analogues such as temperature) must be developed to understand self-organizing, emergent, behavior regarding some observer’s interest.

“Complexologists often employ ‘interesting’ as a synonym for ‘complex’. But what government agency would supply funds for research on a ‘unified theory of interesting things’?” [Horgan] What is usually referred to as self-organizing behavior is the spontaneous formation of well organized structures, patterns, or behaviors, from random initial conditions. The systems used to study this behavior are referred to as dynamical systems: state-determined systems. They possess a large number of elements or variables, and thus very large state spaces. However, when started with some initial conditions they tend to converge to small areas of this space (attractor basins) which can be interpreted as a form of self-organization.

The existence of attractors is identified with the dissipation of some form of energy (friction), therefore, like living systems, dissipative structures can only be maintained by a constant flux of energy through them, and are therefore not in equilibrium. These attractors may be chaotic in which case the emergent behavior becomes too disorganized to grasp (disorganized complexity), though still self-organizing since chaotic attractors tend to be restricted to small volumes of their state-space (e.g. chaotic in a small subset of dimensions of the statspace).

The behavior of interest is often found in the transition between order and chaos - edge of chaos - and classified as a kind of organized complexity [Weaver, 1948]. Another parallel to living systems here is that such dynamical structures are not devised to exhibit this behavior, they develop spontaneously from random initial conditions (note: not from special initial conditions). This behavior - many parts working together to achieve a higher order — is also known as synergetics. Since such formal dynamical systems are usually used to model real dynamical systems such as chemical networks of reactions, non-equilibrium thermodynamic behavior, or even mineral osmotic growths, the conclusion is that in nature, there is a tendency for spontaneous self-organization which is therefore universal. Further, only matter out of equilibrium (with dissipation) can achieve self-organization, which may be quite complex (strange attractors, etc.). This undeniable tendency for the spontaneous formation of complex physical patterns, is also frequently used to propose that life (an autonomous dissipative organization maintained through metabolism) is more general than usually accepted and that even mineral structures can be in this sense alive.

This process of self-organization is also often interpreted as the evolution of order from chaos. However, notice that this evolution is limited in its complexity level to the attractors dynamic systems converge to. A given dynamic system, unless its parameters are changed (structural perturbation), cannot escape its own attractor landscape and it is therefore constrained in its evolutionary potential. This limitation will become more apparent when we approach the problem of self-replication.

Bonabeau and his colleagues have provided a useful brief summary of the nature and properties of SO. They define and describe SO as "...a set of dynamical mechanisms whereby structures appear at the global level of a system from interactions among its lower-level components. The rules specifying the interactions among the system's constituent units are executed on the basis of purely local information, without reference to the global pattern, which is an emergent property of the system rather than a property imposed upon the system by an external ordering influence." They go on to identify four basic ingredients of SO, and three characteristic signatures. The ingredients are:

- positive feedback

- negative feedback

- the amplification of fluctuations and

- the presence of multiple interactions

The signatures are the creation of spatiotemporal structures in an initially homogeneous medium, the possible attainability of different stable states (multistability), and the existence of parametrically-determined bifurcations. The mechanism of stigmetry, combined with environmental physics, provides the basic ingredients in social insects; the resultant SO produces outcomes which display the characteristic signatures. Stigmergic SO is distinguished from the purely physical SO because it involves mobile agents. Agents can sense the local environment, and act on it, in ways determined by their physical and computational constituents. The possibilities for producing spatiotemporal structures both in the environment and in the distribution of agents within the environment are therefore infinitely greater than those arising directly from the environmental physics. It is this potential richness of behavior-mediated changes which has been exploited by evolution to produce the striking phenomena found in social insect colonies; Bonabeau et al have pointed out some of the possible ways in which evolution may favor the emergence of some aspects of self organization.

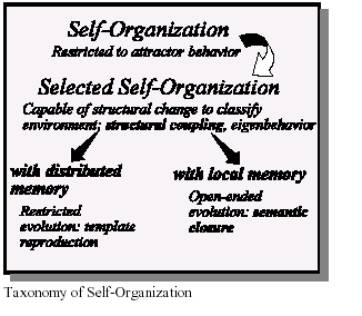

Figure 2

3

Swarm

Intelligence

Swarm Intelligence (SI) is a computational and behavioral metaphor for solving distributed problems that takes its inspiration from biological examples mainly provided by social insects. The abilities of such natural systems appear to transcend the abilities of the constituent individual agents. In most biological cases studied so far, the robust and capable high-level group behavior is mediated by nothing more than a small set of simple low-level interactions between individuals and between individuals and the environment.

Understanding how animals work is a problem of “reverse engineering”. Rather than building something with a certain functional capability, we have something that already functions and want to figure out how it works.

The application of engineering methodologies seems to be an appropriate and promising approach, though not easy to implement.

One of the aims of SI research is to exploit the advantages of using a group to achieve a task over using a single complex program to achieve the same task. In other cases, tasks exist which cannot be performed by a single program, necessitating an SI approach. A swarm may carry several benefits:

- Redundancy

Failures of individuals lead to a gradual, not catastrophic, failure of the system. Loss of an individual member of a swarm is not disastrous, whereas loss of a subsystem of a complex system usually results in catastrophic failure.

- Simplification

Individual agents may be simpler and cheaper.

- Time

Efficiency

Number of agents may achieve a task in a shorter time than a single agent. Maximizing the increase in efficiency is one of the main areas of investigation in this field.

- Resource

Efficiency

Limited resources may be allocated amongst members of a heterogeneous agent team in order to maximize the efficiency of the team.

Swarm Intelligence is characterized by the following properties:

3.1

Communication

For the swarm to have a useful task achieving behavior, the members must communicate.

Communication

is effected in the group through the following methods:

- Directed communication is communication aimed at a particular receiver or receivers.

- Indirect communication is communication based on the observation of other agents’ external states. Indirect communication can be by signposting, where robots broadcast their state by advertising it visually or in some localized way.

- Another method of indirect communication is by changing the local environment, so that when it is visited by other robots, the change is sensed. This is termed stigmetry, so-named by the French biologist P.P. P.P. Grassé during his investigations of termites, which leave chemical trails to aid nest building and foraging.

3.2

Cooperation

Cooperation is defined as an action by one agent which assists another agent in the achievement of its task. This involves communication of one of the types identified above.

Explicit cooperation is interaction which involves exchanging information between agents or performing actions to benefit another agent in achieving some task. That is, a type of altruistic behavior. Implicit cooperation also involves an exchange of information or performance of a task, but in this case it is a necessary part of the agent’s own behavior and is not explicitly intended to benefit another agent. Cases where such a benefit exists are examples of symbiosis.

The behaviors of agents in a robotic swarm must use cooperation to deal with interference which adversely impacts the swarm’s task achieving efficiency.

Multi-agent systems are subject to two types of interference; resource competition and goal competition. Resource competition arises when agents share and compete for resources such as space, energy, information or objects. Goal competition arises when agents in a heterogeneous group compete to achieve mutually incompatible goals or subgoals. Interference of both types must be reduced to decrease the adverse impact on the group’s efficiency. In the behavioral approach, this is achieved by building or evolving social behavior sets, leading to a reduction in global cost functions related to the achievement of the overall swarm’s task. This is often at the expense of increases in cost functions at the scale of individual agents, although social rules can equally lead to reductions in these cost functions, translating to increases in efficiency of individual agents. Multi-agent control strategies aim to exert control over individuals in the swarm to allow the swarm’s task to be achieved. Moreover, they partially or fully define the task itself. The control strategy may be controlled by imbuing certain agents in a heterogeneous swarm with planning abilities and designing in communication hierarchies to support information about the task and agents’ states reaching the planner agents. At the other extreme, the behavior of the swarm may arise as a result of individuals’ subtasks together producing an emergent global behavior.

An example of the working of the insect communities is given below:

3.3

Army ant behavior

- 500,000

to 20,000,000 per colony

- feed

on small arthropods

- 15

nomadic days: raid 200m in straight line, 20m raid front, 30,000 prey

items brought back, bivouac with 50,000 larvae moved every night

- 20

static days: 14 raids, each at 123 degrees to previous raid

- fission:

colony stages two raids in

- opposite

directions, queens run down raid line, one survives in each raid, each

colony becomes independent

3.4

Termite construction

- some

of largest and longest lasting nonhuman constructions

- not just mounds of earth: cemented together, highly structured and differentiated, many different functional aspects (ventilation ducts, cooling vents, royal chamber, brood chambers, food stores, fungus gardens, middens, water tunnels, etc

3.5

Swarm

taxonomy

Dudek et. al. [DuJe93] propose a taxonomy for organising different swarm models. The

distinguishing factors, along with their possible classifications:

- Swarm size

- Alone (1 unit),

- Pair (2 units),

- Limited group size with respect to task size,

- Large effectively infinite group size.

- Communication

range

- None,

- Communication between near agents,

- Infinite (able to communicate with any other agent).

- Communication topology

- Broadcast,

- Address,

- Tree,

- Graph,

- Other.

- Communication bandwidth

- High (communication cost is free),

- Motion (communication carries a cost related to the

- Distance between communicating agents),

- Low (communication cost is high).

- Swarm reconfigurability

- Static,

- Coordinated rearrangement (requires communication),

- Dynamic rearrangement (agents can redistribute

- Arbitrarily).

- Unit processing ability

- Non-linear summation unit (eg. Processing equivalent to a

- Single

artificial neuron),

- Finite

state automaton,

- Push-down automaton,

- Turing machine equivalent (general computation).

- Swarm composition

- Homogeneous,

- Heterogeneous.

A swarm composition is still classified as heterogeneous if its member agents are physically similar if the programming of the agents differs.

4

Behavior model approach to multiple

robot systems

Traditional approaches to the investigation of swarming systems would model the agents’ behaviors as rule-based or finite state machine (FSM) computations. By examining the efficiency with which the swarming system achieves its task, the behavior FSMs are redesigned by the researcher to improve efficiency. As this can be a fraught exercise, recently there has been a move toward allowing the behaviors or behavior selection mechanisms of individuals to evolve. This may be done by endowing them with genetic-algorithms whose outputs code for differing selections. The genetic code is then allowed to evolve according to some fitness measure. i.e. There has been a shift from a top-down to a bottom-up approach. This has been most evident within the Alife group; less-so amongst DAI researchers, revealing the differing interests of the groups.

However, the newer approach is often based on combining a subset of behaviors which have been identified using the traditional approach. Later work might then seek to evolve the behavior selection criteria to maximize some aspect of task achievement.

The work in this thesis is based on the traditional approach as the application of SR to accurate surveying has not been investigated before.

The behavior model upon which this thesis is based is due to Maja Mataric. This work grew out of the work of Rodney Brooks [Bro86]. Brooks’ approach to achieving useful, real-time behavior by a robot operating in a complex world is to decompose task solving into simple behaviors which are combined by a hierarchy of competing processing units.

Mataric built on this behavioral approach by applying it to the problem of interacting with multiple robots. She proposed a basic set of robot behaviors, which may be combined to generate more complex behaviors. These behaviors form a minimal set, the combination of whose members allow a complete implementation of interaction and navigation in a plane. The basic behaviors are called avoidance, following, aggregation, dispersion, homing and wandering.

A behavior is defined as an operator which guarantees a particular goal. The goals fall into two categories; maintenance goals or attainment goals. A maintenance goal is one which ensures that some time persistent task is being achieved or a dynamic equilibrium is being maintained. An attainment goal places the agent in a terminal state with respect to that behavior; once achieved, the behavior state enters a completed state.

An example of a maintenance goal is ‘maintain energy stores by collecting food’. An example of an attainment goal is ‘navigate to a goal’.

4.1

Basic behaviors

The basic behaviors, avoidance, following, aggregation, dispersion, homing and wandering may be described in formal notation.

4.2

Combining basic behaviors

Basic behaviors

must be combined in some way to form

useful composite behaviors. One example of a composite behavior is flocking.

The term defines a collective motion of agents toward a goal. It may be

realized as a combination of safe-wandering, aggregation and

dispersion.

By combining the flocking behavior with following, a

slightly

more complex composite behavior called herding is realized.

The combination of basic behaviors to form more complex composite behaviors may be performed in two ways; directly or temporally. Direct combination of behaviors allows all basic behaviors to simultaneously contribute to the behavior output.

Direct combination may be realized by summing direction and velocity vectors, which are the outputs of the basic behaviors. The flocking and herding behaviors are formed through direct combination of their basic constituent behaviors.

Temporal combination of basic behaviors to form more complex behaviors is performed

through sequencing by a finite state machine. Sensor data is used by the agent to define events which cause changes of state.

The composite behaviors possessed by the robot surveying team members in this investigation required only temporal combination of the safe-wandering and homing basic behaviors.

5

Issues

with

multiple robot systems

5.1

Cooperation through communication

The members of a robot swarm can use communication to improve the efficiency of some

global task. Communication thus provides a method for trading off improvement in the global cost function against local cost functions [Mat97]. If an improvement of the global cost function is measured, a problem of credit assignment arises. Reward must be distributed amongst the agents whose contributions to the global cost function vary. If the credit assignment problem can be solved, this reward may then be used by individual agents to affect behavior selection.

To solve the assignment of credit involves communication between agents. Aspects of the global task state can be sensed by individual agents and this information disseminated amongst the agents to build the global task cost function.

5.2

Specialization amongst agents

A Swarm Robotic (SR) system may be classified as homogeneous or heterogeneous, based on whether the agents comprising the system are identical or specialized, both physically and with respect to their behavior sets.

Homogeneity of agents has the following advantages:

A given number of identical agents may be able to be manufactured more cheaply than a

heterogeneous group.

Standardization in all aspects relating to the group, such as delivery and maintenance is simplified.

Redundancy is built into a homogeneous group. If individuals in the group fail, since the agents all have a common goal, this will be achieved by the remaining agents.

Heterogeneity has the following advantages:

Resources may be allocated to group members in a cost effective manner. Where agents subsystems are expensive, it is not sensible to provide these same subsystems to all members of a group.

Communication hierarchies may be established, often leading to a more natural system of swarm management.

6

Modular reconfigurable robotics

Modular reconfigurable robotics is a related approach to building robots for various complex tasks. Robots are built out of a number of identical simple modules. Each module contains a processing unit, a motor, sensors and the ability to attach to other modules. One module can’t do much by itself, but when many modules are connected together, the result may be a system capable of complex behaviors. A modular robot can even reconfigure itself—change its shape by moving its modules around—to meet the demands of different tasks or different working environments. For example, the PolyBot developed at the Palo Alto Research Center is capable to reconfigure itself for snake-like or spider-like locomotion. Self-reconfigurable robots have several advantages over traditional fixed-shape robots:

1 The modules can connect in many ways making it possible for a single robotic system to solve a range of tasks. This is useful in scenarios where is undesirable to build a special purpose robot for each task. The robot may even decompose itself in several smaller ones.

2 Self-reconfigurable robots can adapt to the environment and change shape as needed.

3 Since the robot is built out of many independent modules it can be robust to module failures. Defect modules can be ejected from the system and the robot may still perform its task.

4 Modules can be mass-produced and therefore the cost may be kept low .

These robots may have applications in rescue scenarios from collapsed buildings, where they may enter the rubble with snake-like locomotion and then reconfigure to support the weight of the rubble collapsed on the buried people. Their ability to serve as many tools at once, versatility and robustness recommend them for space applications, saving weight and being able to packing into compressed forms. They may also have various military applications. However, there currently exist a number of both hardware and software research challenges that have to be overcome in order for modular robots to be able to perform in such applications.

7

Predator-Prey model

All the above mentioned

algorithms are based on biological systems, but they lack one important

point-

the ability to focus on a particular goal. The Predator prey algorithm

was

developed with the intention to overcome this shortcoming. In the

predator prey

algorithm, the program bearing the role of the predator is focused on

only one

goal- to capture the prey. On the capture of each prey unit, a reward

in some

form will be awarded. All the actions of the predator are focused on

moving

towards the ultimate goal- the capture of the prey. The predator and

prey

population can be set to any arbitrary value, but soon will reach a

stable state.

A maximum can be set so that the prey population will not fall below a

specific

limit.

The Predator-Prey model tracks

prey and predator populations over time. You can vary prey population

growth

parameters to simulate environmental fluctuations.

Predator and prey population

levels tend to become constant over time over time.

Inputs

Predator:

Starting

predator population

Harvest efficiency

Conversion efficiency

Starvation rate in the absence

of prey

Functional response (prey

capture as a function of availability)

Prey:

Starting prey population

Maximum prey population

(carrying capacity)

Intrinsic rate of increase

Variability in carrying capacity

and intrinsic rate of increase Outputs

8

Applications

Self-organizing multiagent

societies may have interesting applications in services, industry and

defense.

·

vacuuming and

cleaning

·

assembly

·

surveillance

and transport

- The

“smart dust” concept implies sprinkling thousands of tiny wireless

sensors on a battlefield to monitor enemy movements without alerting

the enemy to their presence. By self-organizing into a sensor network,

smart dust would filter raw data for relevance before relaying only the

important findings to central command. The idea was launched by the

Pentagon in 1999 and has recently reached prototype stage16.

- Brooks

and Flynn has proposed the used of robotic swarms or space exploration.

- Foraging

algorithm in ants - packet transmission in

network

- Obstacle

avoidance in ants – efficient routing table construction over a network.

- Termite

mound building- for efficient task allocation and P2P searching of

files.

- Incorporating

complexity modeling-for breaking down complex structures into many

simple modules

- Automatic

complex task handling such as stock market share negotiation, weather

prediction etc

- Proving

of theorems and solutions to unsolvable problems in math’s and astronomy

- Medical

Applications

- Nanobots

– Nanometer size robots which can Even diffuse through the skin of the

patient which can be programmed according as to recognize specific

microorganisms, capture and destroy them; which can lead to cure of

various diseases like cancer, AIDS etc and any disease imaginable- it

can work as the second immune system of the body, only to be more

powerful and quick.

- Microimaging

–Instead of MRI scans and other methods which can be harmful to the

body, a fluid containing the nanobots can be injected into the body,

which can transmit its data over to the central computer which forms

the final image from the bits collected from individual bots

- The

concept of “swallowing a doctor to cure the disease” as mentioned in

Fennymans’ famous speech will become a reality. once the problem of

mass scale production and making the robots acceptable to the body is

overcome, which may become possible within

6-10 years.

Conclusion

The

realms of

application of biological algorithms are large and their scope extends

far and

wide. Military, Medicine, Space research and Biology etc are a few of

the

fields already benefiting from this approach largely. In future

efficient

methods may be found out by which the unit rules can be formulated

easily so

that the macro behavior can lead to a behavior which results in the

solution to

the problem at hand.

The field of nanotechnology is

growing at a rapid pace too. Once the problem of producing nanobots at

large

scale is perfected, these nanometer size robots can be programmed to

recognize

specific microorganisms, capture and destroy them; which can lead to

cure of

various diseases like cancer, AIDS etc

Surveillance vehicles with modular

architecture can be used in space research and remote exploration.

The swarm intelligence along

with the computing power of computers can be used to create highly

intelligent

systems which can solve complex problems which h human beings cannot

even

imagine to solve. Together, these algorithms bring a new era of

computing in

which thee philosophies of programming will be completely different.

References

Books

Prey - Micheal Crichton

Artificial Intelligence - Elaine Rich and Kevin Knight

Compact

Discs

Britannica CD

2.0, Encyclopedia

Britannica, 1995

Journals

“A Robust Layered Control - IEEE Journal of Robotics and Automation, Vol.RA-

System for a Mobile Robot”, 2 No.1, 1986 Brooks, R.

“A Robot that Walks; - Neural Computation, Brooks, R.

Emergent Behaviors from a

Carefully Evolved Network”

“A Taxonomy for Swarm - Proceedings of the 1993 IEEE/RSJ International

Robots”,

Conference

on Intelligent Robots and Systems,

Yokohama,

Japan

“From Local

Actions to

-

Artificial

Life IV: Proceedings of the

Global Tasks: Stigmetry and Fourth International Workshop on the Synthesis

Collective Robotics” and Simulation of Living Systems, MIT Press

Beckers, R., Holland, O.E. &

Deneubourg, J.L.

Websites

www.aaai.com - The Association of Artificial Intelligence

www.caltech.edu - The Caltech university homepage

www.csu.edu - The Washington university homepage