
MONITOR

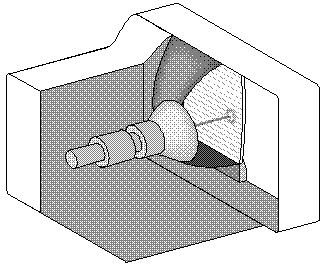

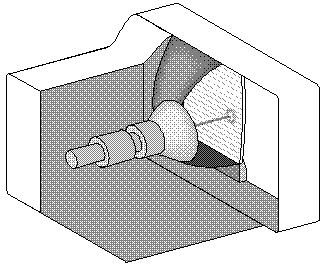

A computer screen or cathode-ray tube.

More Detailed Definition:

(1) Another term for display screen. The term monitor, however, usually refers to the entire box, whereas display screen can mean just the screen. In addition, the term monitor often implies graphics capabilities.

There are many ways to classify monitors. The most basic is by color capability, which divides monitors into three classes:

- monochrome: Monochrome monitors actually display two colors, one for the background and one for the foreground. The colors can be black and white, green and black, or amber and black.

- gray-scale: A gray-scale monitor is a special type of monochrome monitor capable of displaying different shades of gray.

- color: Color monitors can display anywhere from 16 to over 1 million different colors. Color monitors are sometimes called RGB monitors because they accept three separate signals: red, green, and blue.
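The number of colors a display can show follows from how many bits describe each pixel. The sketch below assumes a simple framebuffer model (the helper names `color_count` and `rgb_color_count` are hypothetical); in practice the limit is set by the video adapter's color depth rather than by the monitor itself.

```python
def color_count(bits_per_pixel: int) -> int:
    # Each pixel holds bits_per_pixel bits, so it can take 2**bits distinct values.
    return 2 ** bits_per_pixel


def rgb_color_count(bits_per_channel: int) -> int:
    # Three independent channels (red, green, blue), each with 2**bits levels,
    # combine multiplicatively.
    return (2 ** bits_per_channel) ** 3


print(color_count(1))      # two colors, as on a monochrome monitor
print(color_count(4))      # the 16-color low end of color monitors
print(color_count(8))      # 256 shades, a typical gray-scale depth
print(rgb_color_count(8))  # 8 bits per RGB channel
```

With 8 bits for each of the three RGB channels the count is 16,777,216, which is consistent with the "over 1 million" upper figure above.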

After color capability, the most important aspect of a monitor is its screen size. As with televisions, screen sizes are measured in diagonal inches, the distance from one corner to the opposite corner. A typical size for small VGA monitors is 14 inches. Monitors that are 16 or more inches diagonally are often called full-page monitors. In addition to their size, monitors can be either portrait (height greater than width) or landscape (width greater than height). Larger landscape monitors can display two full pages side by side. The screen size is sometimes misleading because there is always an area around the edge of the screen that can't be used. Therefore, monitor manufacturers must now also state the viewable area, that is, the area of the screen that is actually used.
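Because size is quoted as a diagonal, the actual width and height depend on the screen's shape. A minimal sketch, assuming the 4:3 aspect ratio typical of CRT monitors (the function name `screen_dimensions` is hypothetical), recovers both sides with the Pythagorean theorem; swapping the ratio models a portrait monitor.

```python
import math


def screen_dimensions(diagonal_in: float, aspect_w: float = 4,
                      aspect_h: float = 3) -> tuple[float, float]:
    """Return (width, height) in inches for a diagonal and aspect ratio."""
    # diagonal^2 = width^2 + height^2 and width:height = aspect_w:aspect_h,
    # so both sides are multiples of diagonal / hypot(aspect_w, aspect_h).
    unit = diagonal_in / math.hypot(aspect_w, aspect_h)
    return aspect_w * unit, aspect_h * unit


landscape = screen_dimensions(14)        # about 11.2 x 8.4 inches
portrait = screen_dimensions(14, 3, 4)   # about 8.4 x 11.2 inches
print(landscape, portrait)
```

The same function applies to the viewable area: pass the manufacturer's viewable diagonal instead of the nominal tube size to get the dimensions of the usable region.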

The resolution of a monitor indicates how many pixels it can display and, for a given screen size, how densely packed they are. In general, the higher the pixel density (often expressed in dots per inch), the sharper the image. Most modern monitors can display 1024 by 768 pixels, the SVGA standard. Some high-end models can display 1280 by 1024, or even 1600 by 1200.
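Pixel density ties resolution to screen size. The sketch below (the name `pixels_per_inch` is hypothetical) divides the number of pixels along the diagonal by the diagonal length. It assumes the image fills the stated diagonal; for a CRT, the viewable diagonal is the more accurate input.

```python
import math


def pixels_per_inch(h_pixels: int, v_pixels: int, diagonal_in: float) -> float:
    # Pixels along the diagonal divided by the diagonal length in inches.
    # Assumes the image fills diagonal_in; for a CRT, pass the viewable
    # diagonal rather than the nominal tube size.
    diagonal_px = math.hypot(h_pixels, v_pixels)
    return diagonal_px / diagonal_in


print(f"{pixels_per_inch(1024, 768, 14):.1f}")   # SVGA on a 14-inch screen
print(f"{pixels_per_inch(1600, 1200, 14):.1f}")  # more pixels, same screen
```

On the same 14-inch diagonal, 1024 by 768 works out to about 91.4 pixels per inch and 1600 by 1200 to about 142.9, which is why a higher resolution on the same screen gives a sharper image.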

Another common way of classifying monitors is by the type of signal they accept: analog or digital. Nearly all modern monitors accept analog signals, which are required by the VGA, SVGA, 8514/A, and other high-resolution color standards.

A few monitors are fixed-frequency, which means that they accept input at only one frequency. Most monitors, however, are multiscanning: they automatically adjust themselves to the frequency of the signals being sent to them. As a result, they can display images at different resolutions, depending on the data being sent to them by the video adapter.
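One way to picture the difference is to model each monitor by the range of horizontal scan frequencies (lines drawn per second) it can lock onto, with a fixed-frequency monitor as a range of a single value. This is a simplified sketch: the `Monitor` class, its ranges, and the tolerance are hypothetical, and the line counts are typical timing values used only for illustration.

```python
from dataclasses import dataclass


def horizontal_scan_khz(total_lines: int, refresh_hz: float) -> float:
    # One horizontal sweep per line, so total lines per frame (including
    # blanking lines) times frames per second gives sweeps per second.
    return total_lines * refresh_hz / 1000.0


@dataclass
class Monitor:
    # Hypothetical model: range of horizontal scan frequencies (kHz) the
    # monitor can sync to. A fixed-frequency monitor has min_khz == max_khz.
    min_khz: float
    max_khz: float
    tolerance_khz: float = 0.5  # hypothetical slack around the range

    def accepts(self, scan_khz: float) -> bool:
        return (self.min_khz - self.tolerance_khz
                <= scan_khz
                <= self.max_khz + self.tolerance_khz)


fixed = Monitor(31.5, 31.5)        # hypothetical fixed-frequency monitor
multiscan = Monitor(30.0, 64.0)    # hypothetical multiscanning monitor

low_res = horizontal_scan_khz(525, 60)   # 640 x 480 with 525 total lines
high_res = horizontal_scan_khz(806, 70)  # 1024 x 768 with 806 total lines

print(fixed.accepts(low_res), fixed.accepts(high_res))          # True False
print(multiscan.accepts(low_res), multiscan.accepts(high_res))  # True True
```

The fixed-frequency monitor can only show the mode whose scan rate matches its single frequency, while the multiscanning monitor accepts both modes because each falls inside its range.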

Other factors that determine a monitor's quality include the following:

- bandwidth: The range of signal frequencies the monitor can handle. This determines how much data it can process and therefore how fast it can refresh at higher resolutions.

- refresh rate: How many times per second the screen is refreshed (redrawn). To avoid flickering, the refresh rate should be at least 72 Hz.

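- interlaced or noninterlaced: Interlacing is a technique in which the monitor draws the odd-numbered lines on one pass and the even-numbered lines on the next. This enables a monitor to display more resolution at a given bandwidth, but it reduces the monitor's reaction speed and can cause visible flicker.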

- dot pitch: The amount of space between each pixel (more precisely, between adjacent phosphor dots of the same color), usually given in millimeters. The smaller the dot pitch, the sharper the image.

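- convergence: The clarity and sharpness of each pixel, which depends on how precisely the red, green, and blue electron beams meet at the same spot on the screen.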
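Bandwidth, refresh rate, and interlacing interact through the number of pixels the monitor must draw each second. A rough sketch (the function name `active_pixel_rate` is hypothetical) counts only visible pixels; real signals need somewhat more bandwidth because of the blanking intervals between lines and frames, which this model ignores.

```python
def active_pixel_rate(width: int, height: int, refresh_hz: int,
                      interlaced: bool = False) -> int:
    """Visible pixels drawn per second, ignoring blanking intervals."""
    # An interlaced display draws every other line on each refresh pass
    # (odd lines, then even lines), so each pass covers half the rows.
    lines_per_pass = height // 2 if interlaced else height
    return width * lines_per_pass * refresh_hz


# Example values only: a noninterlaced mode at the 72 Hz flicker threshold
# and an interlaced mode at the same resolution.
noninterlaced = active_pixel_rate(1024, 768, 72)
interlaced = active_pixel_rate(1024, 768, 87, interlaced=True)
print(noninterlaced, interlaced)
```

The noninterlaced mode needs 56,623,104 pixels per second against 34,209,792 for the interlaced one, even though the interlaced mode refreshes more often. This illustrates how interlacing lets a monitor with limited bandwidth reach a higher resolution, at the cost of redrawing each individual line less often.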
