
Here are essays on the technological Singularity, and its economic and political consequences. My main politics essays, on the central themes I talk about, the interrelation of politics, economics and automation, are on my economics page. Essays on how to reform liberalism so liberals win elections again, along with some miscellaneous political essays, are on my politics page. Essays about rationalism and purely social political issues are on my rationalism page because they are about science vs. religion. My futurology page also has plenty of political overtones.

The Singularity, in which I try to introduce more people to the idea that we may have a tidal wave of technological change in store for us in the coming decades.

Economics of the Singularity, in which I show that the Singularity, if it happens, would be the most persuasive case for a political shift to the left.

(written in 2003 and updated since)

In my essays on my main politics page, I talked about automation a great deal. Here, I want to focus on how much automation we can expect in the coming decades, along with other technological advances. The possibilities are mind-boggling. Yet the general public seems to have only the vaguest idea what could be in store for us.

An idea has been taking hold among a small but growing number of people in the last few decades, which most of them call the Singularity. (Other names I've heard for it include the Breakthrough, the Spike, the Convergence, and "the technological tsunami".) The idea is that not only is technology advancing, and not only is the rate of advance speeding up, as it has throughout history, but that rate is about to jump far beyond anything seen before, most likely in the next few decades, releasing an unprecedented tidal wave of technological advance and changes to daily life. While no one really knows how fast progress could speed up, just as a guess, we could see as much technological progress every year as we have seen in a century!

The main reason for the Singularity is that computers are rapidly approaching human intelligence. Although the computers in daily life today have minuscule intelligence compared to people, the rate at which they are increasing in power is so great that they should reach human intelligence in less than 2 decades.

There are 2 things necessary for computers to reach the power of the human brain. The first is the hardware to process as much information as quickly as the human brain can. The second is the software to instruct computers how to process that information in the same way that the human brain does.

First I'll talk about the hardware. Computers have been increasing in power and decreasing in price at an astonishing rate. Gordon Moore, a co-founder of Intel, a major computer chip company, formulated what became known as "Moore's Law". In its best-known form (from 1975), it stated that computer chips would continue to increase in power, with the number of transistors per chip doubling every 2 years. What this meant was that the processing power you could buy for a given price kept doubling, initially every 2 years. But the pace continued to quicken, to every 18 months around the 1980s, and every 12 months between 1995 and 2005. Since then, progress has slowed as the transistors in computer chips have approached the molecular level in size.

Further progress will have to go in a new direction, to parallel processing, in which, instead of a single processor getting faster and faster, the task is split up among more and more processors. Parallel processing is how the brain achieves its awesome power, despite the fact that its processors, the neurons, and the circuitry between them, the axons and dendrites, work far slower than electronic circuitry. We are just at the beginning of parallel processing taking off, so that the number of processors per computer, now usually no more than 2 in personal computers, keeps doubling every year or 2 the way individual processor power was doubling. The main problem now is figuring out how to write programs that take advantage of parallel processing. Computers will have to shift to working the same way that the brain works. Fortunately, the sorts of problems that require massive processing power, the ones that the brain solves most easily, are just the ones that can be most easily split up into parallel tasks.
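Here is a minimal sketch of what "splitting a task among processors" means in a program. The function names and the sum-of-squares task are illustrative choices only. One caveat: in standard Python, threads share one interpreter lock, so this shows the split-and-combine pattern rather than a real speedup. For heavy numeric work you would swap in `ProcessPoolExecutor`.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum_of_squares(chunk):
    """Work done by one 'processor': sum the squares in its slice of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    """Split the data into roughly equal chunks, give each chunk to a worker,
    then combine the partial results into one answer."""
    size = max(1, -(-len(data) // workers))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum_of_squares, chunks))


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1_000))))
```

This works because each chunk can be processed without knowing anything about the others. That independence is what makes a problem "easy to split up".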

While there's no way to know how this will play out, let's assume that personal computers will switch to parallel processing, and will continue to double in power every 2 years on average. Since 10 doublings is just about a 1000-fold increase, that means that computers of a given price should be at least 1000 times more powerful 20 years from now than they are now. Alternatively, computers of a given power should cost 1/1000 of what they do now. It may happen even sooner: once we have mastered programming for parallel processing and it works its way down to personal computers, the doubling time may shrink again. Where is this all heading?
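The 1000-fold figure is just compounding: 10 doublings give 2^10 = 1024. Below is a small sketch of that arithmetic. The function names are my own, and the only inputs are the doubling times mentioned above.

```python
import math


def growth_factor(years, doubling_time_years):
    """Total multiplication in computing power per dollar after `years`,
    if power doubles every `doubling_time_years`."""
    return 2 ** (years / doubling_time_years)


def years_to_multiply(factor, doubling_time_years):
    """Years needed to multiply computing power per dollar by `factor`."""
    return doubling_time_years * math.log2(factor)


print(round(growth_factor(20, 2)))              # 10 doublings in 20 years
print(round(years_to_multiply(1000, 2), 1))     # years for a 1000-fold gain
print(round(growth_factor(20, 1)))              # if doubling returns to yearly
```

The last line shows why a return to yearly doubling matters so much. Over the same 20 years the gain is about a millionfold instead of about a thousandfold.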

There is little doubt that computer hardware will reach and then exceed the power of the human brain. According to the best estimates of the processing power of the brain, in terms of a unit of processing called a "flop" (floating-point operations per second), the brain processes as much as 100 quadrillion (10 to the 17th power) flops (or 100 petaflops). However, it may only process as little as 100 trillion (10 to the 14th power) flops (100 teraflops) because of a great deal of redundancy in the way that the networks of neurons process information.

The world's most powerful supercomputer just about reached the bottom of that range of estimates in July 2004, and in June 2005, a new computer (IBM's "Blue Gene") became 3 times more powerful still. A more powerful version of Blue Gene, which is supposed to start being assembled around the time of this writing (autumn 2007), is supposed to operate at 10 to the 15th power flops in 2008, and later 3 times that speed. A still more powerful version that would operate at 10 to the 16th power flops is planned to go into operation by 2012. Supercomputers are already using parallel processing on a massive scale, so their rate of increase in power, a doubling every year, has not been decreasing, at least so far. They are being used for the sorts of problems that lend themselves to parallel processing, such as predicting the weather and emulating brains. Even if the upper estimate of how much power it would take to emulate a human brain is correct, computers would reach the human range by 2016. Most experts seem to think the upper number is no higher than 10 quadrillion (10 to the 16th power), so that they would reach the human range by 2012 (if they haven't already). So far, in fact, researchers have consistently been able to get computers to emulate functions of the brain with computing power closer to the lowest figure, 10 to the 14th.

The world's most powerful supercomputers cost about $100 million, however, and at that price, they would not be used to replace people even if we could get them to function like people, since very few employees cost that much to hire. Such computers are used for the kinds of tasks computers are best at and people are worst at. However, the price of computers of a given power will continue to fall. If the pace of Moore's Law continues, 10 years after those $100 million supercomputers reach the processing power of the human brain, $100,000 computers, which ordinary businesses can afford, will reach that power, so around 2014 - 2022. Around 2022 - 2030, personal computers in the $1000 range will reach that power, as programs that can use parallel processing make their way down to personal computers. (After a certain point, various fixed costs, especially the price of the computer screen, prevent any further decreases in price, at least until less-expensive ways are found to make the screens. That has, in fact, been happening, but at a much slower pace than Moore's Law.)
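These price timelines can be checked with a rough calculation. It assumes, as a simplification, that the price of a fixed amount of computing power halves every year, which matches the yearly doubling of supercomputers mentioned above. The dollar figures come from the text.

```python
import math


def years_for_price_drop(start_price, target_price, halving_time_years=1.0):
    """Years until a fixed amount of computing power falls from start_price
    to target_price, if its price halves every halving_time_years."""
    return halving_time_years * math.log2(start_price / target_price)


SUPERCOMPUTER = 100_000_000   # dollars, from the text
BUSINESS = 100_000            # dollars
PERSONAL = 1_000              # dollars

for label, price in [("business", BUSINESS), ("personal", PERSONAL)]:
    years = years_for_price_drop(SUPERCOMPUTER, price)
    print(f"{label}: about {years:.1f} years after the supercomputer")
```

A 1000-fold drop takes about 10 halvings, which gives the 10-year gap for $100,000 machines. Reaching $1000 takes about 16.6 years. Added to the 2004 - 2012 supercomputer range, that lands close to the 2022 - 2030 estimate.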

The software is of course not as far along as the hardware, and it's much more difficult to convince people that we might have computers like HAL in the movie "2001: A Space Odyssey" anytime soon, that you would be able to talk to like a person, and that would be able to take over any job that humans now do. Most people now say, "Yeah, right, they've been talking about that for 50 years already, and it's always 20 years in the future". But that's not quite so.

A few computer scientists did predict human-level artificial intelligence (A. I.) in 10 or 20 years back in the late 1950s and early 1960s. When they were able to get computers to do the most sophisticated things humans can do, such as solving mathematical problems, they became over-optimistic that computers would soon be able to do everything. They should have wondered what all those billions of neurons and trillions of connections in the brain were doing if they were unnecessary, and if they were necessary, then how the computers back then, barely more powerful than pocket calculators, could emulate all of human behavior. It soon became apparent that some of the things that are very difficult for humans, such as mathematics, are easy for computers, but most things humans can do easily, such as understanding speech, making sense of the visual world, manipulating objects and walking around without bumping into things, computers could not do at all. In fact, since hopes for imminent A. I. were dashed in the 1960s, the standard answer for when human-level A. I. would be developed stayed at around 2030, when it was projected that computer information processing power would reach the power of the human brain. But Moore's Law has greatly sped up, and as I said above, supercomputers are already at about the power of the human brain, 20 or 25 years early. It will take some time before artificial intelligence researchers create true artificial intelligence, now that they are just starting to have computers with close to human-level power to play with, so virtually no one thinks that true A. I. is just about to come into existence. However, many experts' estimates have moved earlier in the past decade, to 2020 or even sooner. Meanwhile time has marched on, and 2020 or sooner is not so very far away.
People tend to think straight-line, that the pace of change will stay the same, when in reality, progress on new technologies tends to be exponential, at first going very slowly, then exploding. Technologies tend to take longer to develop than expected, but once developed, they tend to burst upon the scene faster than expected. Just when most people give up hope that technologies will ever come to pass, that's when they do.

And in case people haven't noticed, some progress has been made toward true A. I. in the past decade, as computers (especially the ones A. I. researchers get to work with, as funding has started to rush back into the field now that it shows promise again) have gone much beyond the power of pocket calculators, and then beyond the power of insect brains, all the way up to the level of the brains of fish and beyond. A few of the things computers could not do before, they are starting to do. We now have practical computer voice recognition, and increasing computer visual perception. Most people have by now encountered computer voice recognition when calling major companies such as airlines and banks. There are also computer dictation programs on the market, that save people from typing. There is software that recognizes people's faces, and there has been political debate whether to install cameras in public places such as airports to spot potential terrorists or other criminals. Language translation devices are becoming practical. The US military is beginning to use them in Iraq because of the shortage of Arabic translators. An article in the July 10, 2006 Miami Herald said that the devices, called Iraqcomms, work by having a person speak English into a microphone, and the device then says the same thing out loud in Arabic, or vice versa.

There have been additional advances that most people don't know about. For example, since the early 1990s, there has been research into computers driving cars -- the ultimate in cruise control. In 1996, a computer was able to drive a car across the U.S., at regular highway speeds, and only needed human help 2% of the time - and computers are now about 1000 times as powerful as they were then. The research was mostly disbanded in the litigious US because even if computer-controlled cars have fewer accidents than human drivers do, unlike with accidents caused by human drivers, the car companies would be the ones that get sued, so they have little incentive to create such cars. However, the research has continued outside the US. As a result, in September 2005, General Motors announced that they plan to put on the market in Europe the first car with auto pilot, called the 2008 Opel Vectra. A driver will still be required to sit in the driver's seat, stay alert, and take over in case of trouble, but presumably, after such cars are on the roads for a while, and get better at driving as computers increase in power, when the statistics show that they have lower accident rates than human-driven cars, human drivers will become unnecessary. Indeed, attitudes will flip from thinking that sitting in a car driven by a computer is foolhardy, to thinking that sitting in a car driven by a fallible, easily-distracted human is foolhardy. According to an article about that car, the computer driving the car will be able to drive in rush hour traffic at up to 60 mph. In July 2006, there was an article about a car that can drive itself with more skill than a human, and that can run obstacle courses flawlessly at 150 mph. Computers can even drive cars off-road, in terrain that would challenge human drivers.
In March 2004 and October 2005, the US military conducted 2 robotic vehicle races, called the Grand Challenge, off-road on a treacherous 132-mile course across the Mojave Desert, in order to spur development of such vehicles. Progress has been so fast that in 2004, no vehicle went more than 7.5 miles, but just 1 1/2 years later, 5 vehicles reached the finish line, and all but 1 of the vehicles went farther than any in the first race.

The US military has serious plans to get soldiers out of harm's way in wars, and increase the "productivity" of military forces so that fewer people are needed to project more force, by replacing military vehicles with robotic vehicles. By 2010, they plan to have teleoperated vehicles, which would be operated by remote control, instead of having soldiers inside them and in harm's way. Soldiers would increasingly stay at home and just push buttons or operate other controls. (They already increasingly just push buttons or operate controls, so they would just do it from a distance.) That, however, is not artificial intelligence, since humans would still be controlling everything. However, as the vehicles become increasingly autonomous, a single human could control an increasingly large fleet of vehicles, letting them operate by themselves under routine circumstances, and only controlling them when they are incapable of making their own decisions. By 2015, the military plans to have purely robotic vehicles that make their own decisions at the level that soldiers in the field would. There is no firm line to tell when we have reached artificial intelligence, as this example shows, since to some degree, military leaders would always control lower-level forces, and formulate overall strategy and give them orders, whether the lower-level forces are human soldiers or robotic vehicles. It's a matter of degree, of how much we can trust robots to work autonomously. To give an idea how this automation planned for the next decade should affect US military power, consider what has happened already because of what little automation has gone on. In the first Gulf War in 1991, military leaders did not think that 300,000 soldiers was enough to invade and occupy Iraq, only to kick Iraq out of Kuwait. Just 12 years later, military leaders thought that 100,000 soldiers was enough to invade and occupy Iraq.
(The occupation has not gone well, of course, but mainly because we still have soldiers in harm's way for the other side to blow up. Within a decade, that won't be the case, and even if we can't completely stamp out opposition, we won't care nearly as much, since the opposition will only have expensive machines to blow up, not irreplaceable people.) The idea that in the future there will be armies of robots waging war against each other is highly unsettling. But I look at the bright side, that if robots will be sophisticated enough to wage war, they will also be able to do our ordinary jobs for us, and liberate us from work. And if that's the case, the resulting world of plenty will take away almost all motivation to wage war (as long as that plenty is fairly distributed, of course). So if the US military seriously thinks they could have armies of robotic soldiers in a decade, why shouldn't the rest of us take seriously the idea that almost all ordinary work could be automated away in a decade?

The above examples of robotic vehicles bring up the point that there are actually 2 ways to go in giving computers more abilities, which might be called the insect way and the human way. It is really amazing what insects can do with brains the size of specks. They do it by "cheating", and having sense organs that provide them with unambiguous information that does not require much intelligence to interpret and act upon. Mosquitoes, for instance, have chemical detectors that smell chemicals given off only by their prey, and carbon dioxide detectors and infrared sensors that detect the breath and body heat of their prey. We humans, on the other hand, rely mostly on stereoscopic vision that requires extremely sophisticated neural machinery to interpret. A lot can be done even without artificial intelligence by going the insect route and giving robots better sensors, such as radar to sense objects around them, GPS, and magnetic markers or RFID chips buried in roads for self-driven cars to follow.

In 2004, the US granted the first patent ever for an invention that was created not by a person, but by a computer. The researchers who created the computer program think that by 2010, computers will overtake humans and produce a majority of all inventions. (This is according to an article in the February 2003 issue of Scientific American, "Evolving Inventions" by John R. Koza, Martin A. Keane and Matthew J. Streeter.) Just imagine how quickly the pace of technological progress will speed up when ever-faster computers are doing most of the inventing.

I've read articles about several robotic machines that conduct scientific experiments, even altering later experiments based on what they found in earlier ones. One machine (see http://www.newscientist.com/article.ns?id=dn4564) conducts genetic experiments, another chemical experiments. Since the drudgery of conducting experiments and gathering data is one of the most time-consuming aspects of science, such developments are starting to free up scientists' time to advance science at a much faster rate. The machine that conducts chemical experiments does the work of 50 chemists, and for far less money than hiring all those chemists. To give an idea what such increases in the speed of research can accomplish, the Human Genome Project was completed as a result of inventing machines that massively increased the speed at which DNA could be read. At the start of the project in 1993, at the rate researchers could read off gene sequences, it would have taken tens of thousands of years to read all 3 billion base pairs (the chemical "letters" of DNA) in the human genome and complete the project. But each year, they were able to speed up the process nearly ten-fold. As a result, the project was finished in just 8 years. Since then, researchers have gone on to reading the genomes of other species at an increasing rate, and they have been piecing together the entire tree of life on earth on a genetic basis.
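A short simulation shows how a yearly speedup can shrink "tens of thousands of years" to a handful. Purely for illustration, it assumes 30,000 years of work at the starting pace, since the text gives only "tens of thousands". The function name is hypothetical.

```python
def years_to_finish(work_years_at_start, yearly_speedup):
    """Count whole years needed to finish a job that would take
    `work_years_at_start` years at the starting pace, if the pace is
    multiplied by `yearly_speedup` every year."""
    if yearly_speedup < 1:
        raise ValueError("a slowing pace might never finish the job")
    done, pace, years = 0.0, 1.0, 0
    while done < work_years_at_start:
        done += pace              # work completed this year, in starting-pace years
        pace *= yearly_speedup    # machines get faster for next year
        years += 1
    return years


print(years_to_finish(30_000, 10))   # exactly ten-fold every year
print(years_to_finish(30_000, 5))    # a more modest five-fold every year
```

Under these assumptions, an exact ten-fold yearly speedup finishes in 6 years. A five-fold speedup takes 8, so the "nearly ten-fold" pace and the 8-year result are in the same ballpark.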

Another researcher (Stephen Thaler at http://www.imagination-engines.com) seems to have discovered the way that the brain produces true creativity. It uses random noise to slightly upset its connections all the time, and then rewards new connections that happen to produce better results for the organism, in order to strengthen them. This is analogous to how evolution uses mutations to create new genetic possibilities that are then winnowed out by natural selection to find only the beneficial ones. The researcher claims that his computer system can be trained to identify any objects, shown to the computer in any orientation. Such a breakthrough would be extremely important in developing practical robots.
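The "jostle with random noise, keep what works better" idea can be sketched as a simple stochastic hill climber. This is my own loose analogy, not Thaler's system. The target values, the noise level and the scoring function are all hypothetical stand-ins for "better results for the organism".

```python
import random


def score(weights, target):
    """Higher is better: negative squared distance from a target behaviour."""
    return -sum((w - t) ** 2 for w, t in zip(weights, target))


def perturb_and_keep(target, steps=2000, noise=0.1, seed=0):
    """Randomly jostle every 'connection' a little, and keep the change
    only if it scores better (a simple stochastic hill climber)."""
    rng = random.Random(seed)
    weights = [0.0] * len(target)
    best = score(weights, target)
    for _ in range(steps):
        trial = [w + rng.gauss(0.0, noise) for w in weights]
        trial_score = score(trial, target)
        if trial_score > best:          # reward: strengthen the new pattern
            weights, best = trial, trial_score
    return weights, best


target = [0.5, -1.0, 2.0]
weights, best = perturb_and_keep(target)
print([round(w, 2) for w in weights], round(best, 4))
```

Most random nudges are discarded, and only the occasional improvement survives. That is the same winnowing the text compares to mutation and natural selection.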

One of the most ambitious A. I. projects is the Cyc project by Douglas Lenat. One of the main problems with A. I. is that computers have no commonsense knowledge about the world. Even when they can figure out the words people are saying, they cannot understand the meaning of the sentences they are saying. Understanding ordinary speech requires all sorts of background knowledge about the world that people aren't even aware they have because it is too obvious. (The sentence "The man saw the light with the telescope" could mean he used the telescope to see the light, or that the light had a telescope. People know it must be the former, since lights don't have telescopes.) So in the Cyc project, a team of computer scientists have been painstakingly feeding into a computer millions of common sense facts about the world. They claimed in a 2005 article that Cyc now knows enough to understand human utterances a large minority of the time, enough that it can begin learning on its own.
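Here is a toy illustration of how stored common-sense facts can settle the telescope sentence. The fact table, its format and the function are hypothetical, and they look nothing like how Cyc actually represents knowledge. They only show the principle of checking which reading the facts allow.

```python
# Hypothetical toy knowledge base: who can use, or have, an instrument.
COMMON_SENSE = {
    ("man", "use", "telescope"): True,
    ("light", "have", "telescope"): False,
}


def attach_with_phrase(subject, obj, instrument, facts=COMMON_SENSE):
    """Decide what 'with <instrument>' modifies in
    '<subject> saw <obj> with <instrument>'."""
    subject_uses = facts.get((subject, "use", instrument), False)
    object_has = facts.get((obj, "have", instrument), False)
    if subject_uses and not object_has:
        return f"the {subject} used the {instrument} to see the {obj}"
    if object_has and not subject_uses:
        return f"the {obj} had the {instrument}"
    return "ambiguous: not enough common-sense knowledge"


print(attach_with_phrase("man", "light", "telescope"))
```

When the table lacks the relevant facts, the function can only report ambiguity. That is why Cyc's approach needs millions of them.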

Another approach to developing A. I. is to reverse-engineer the one thing we know of that already possesses human-level intelligence in order to see how it works: the human brain. (Reverse engineering means taking a finished product and figuring out exactly how it works. It is usually used in industrial spying, in which one company takes apart a competitor's product to figure out how it works so that they can build it themselves and skip the original engineering research in how to create it.) Unbeknownst to most people, a number of regions of the brain have already been completely reverse-engineered, so that scientists now know exactly how they work. The parts of the brain that process speech and vision were the first to be reverse-engineered, and as a result, we now have computer voice recognition and increasing visual perception. Once those brain regions were reverse-engineered, scientists were able to create software that emulates their functions.

Scientists have been gaining knowledge of how the brain works to a degree that few people realize. It might seem like unraveling the brain, which consists of billions of neurons with trillions of connections, would be a hopeless task, like untangling the world's biggest pile of spaghetti, but it is proving not as stupendously difficult as it might seem. For one thing, the brain is highly modular, and there are merely dozens of types of modules to understand. Each module or region has its own pattern of neural architecture to accomplish the task it performs. Of the dozens of types of modules, scientists have already reverse-engineered about a dozen of them.

The cortex, the outer part of the brain that is most developed in humans, which is responsible for our higher-level thought, consists of about a million repeated modules called cortical columns. The cortex alone is 85% of the human brain by mass. Scientists already largely understand how cortical columns work, and a group of researchers (at IBM and the Brain Mind Institute in Switzerland - see http://www.bluebrainproject.epfl.ch) have started a project to create a computer simulation of a cortical column, which they expect to finish around 2007. After that, they want to multiply the cortical columns and simulate the entire cortex in a computer.

For more information on how the cortex works, I recommend a book called "On Intelligence" by Jeff Hawkins, an entrepreneur who invented the Palm Pilot, and now wants to create true machine intelligence. He describes how the cortical columns work and are connected together in some detail. They are each a special type of neural net that relies heavily on feedback, called auto-associative, which researchers have found to be the most powerful when studying computer models of neural nets. Each one specializes in recognizing a particular pattern, and can recognize it even if it receives partial or faulty input. The cortical columns are connected up in a hierarchy of levels that gradually convert sensory input from constantly-changing raw data to the stable objects in the world. They do so faster than computers have been able to so far, by relying heavily on stored memories of patterns from previous similar experiences, so that they do not have to interpret everything anew each time. The highest levels of the hierarchy store abstract regularities about the world, which become increasingly abstract and powerful for making predictions as we get older and more experienced. From moment to moment, we understand what we are experiencing around us by comparing it to memories of similar previous experiences. When something is novel to us, we learn something new, and it is then stored. The book was written in 2004, and he predicted that we will see artificial cortexes in the laboratory in just a few years, and that they will become one of the hottest areas of technology within 10 years. (My only complaint about the book is that, as usual with people in the far-right U.S., he seems to see no fundamental political implications for this technology, so despite computers with super-human intellect, somehow we'll still have a society with the same structure, with businesses, jobs and all. 
He specifically says that robots will never replace people, because robots are much more expensive than employees, and the price of robots hasn't been coming down much. He completely misses the idea that the first expensive robots would produce far more robots far less expensively than with people building them, and that after a brief time, we'd have billions of robot-built robots for virtually no cost. Americans seem far too terrified of the freedom such technology would bring to consider the idea, and quickly put it out of their heads with a horrified shudder. After all, nowadays, Americans are the most freedom-fearing people on the planet.)
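Hawkins's cortical columns are more elaborate than anything that fits here. As a stand-in, this sketch uses the classic textbook auto-associative memory, a Hopfield network. It is not Hawkins's model, but it shows the property described above: feedback among units lets the network recover a stored pattern from faulty or partial input. The patterns are made up for illustration.

```python
def train(patterns):
    """Hebbian learning: build a symmetric weight matrix that stores each
    +1/-1 pattern as a stable state (no self-connections)."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, cue, sweeps=5):
    """Repeatedly update each unit from the others' feedback until the
    state settles on a stored pattern."""
    state = list(cue)
    n = len(state)
    for _ in range(sweeps):
        for i in range(n):
            total = sum(w[i][j] * state[j] for j in range(n))
            state[i] = 1 if total >= 0 else -1
    return state


stored_a = [1, 1, 1, 1, -1, -1, -1, -1]
stored_b = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([stored_a, stored_b])

faulty = [-1, 1, 1, 1, -1, -1, -1, -1]   # first unit flipped
partial = [1, 1, 1, 1, -1, -1, 0, 0]     # last two units unknown (0)
print(recall(w, faulty) == stored_a, recall(w, partial) == stored_a)
```

Both the corrupted cue and the incomplete cue settle back onto the stored pattern, which is what "auto-associative" means.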

He points out that unlike current computers, cortexes are highly fault-tolerant. People's brains do not cease to function even though they are continually losing brain cells every day. Computer chips are now relatively expensive to create because any flaws make them unusable for today's computers, so they have to be perfect. Computer chips made for artificial cortexes could have numerous flaws, and it wouldn't matter for their functioning. Therefore, the cost of computers would come down considerably when we switch to modeling machine intelligence after the brain.

Other scientists are working on the more ancient parts of the brain near the center, which contain all the other modules. In 2003, researchers (led by Theodore Berger - see www.newscientist.com/article.ns?id=dn3488) finished reverse-engineering the hippocampus, a crucial part of the brain that translates short-term memories, which we would forget after a few minutes, into some mysterious sort of code so that they can be stored in long-term memory. They now have a computer program that precisely duplicates the output of the hippocampus, given the same input, even though they still don't understand exactly what it is doing and how that code works. Since then, they have been working on replacing the hippocampus with computer chips in laboratory animals to double-check that the computer chips are functioning just like the real thing. (According to Jeff Hawkins' book, the hippocampus functions as the highest level of the hierarchy in the cortex, even though it is a separate structure. It handles completely novel experiences, stores them temporarily, and then passes them down the hierarchy to the cortex once they are no longer novel, to make room for newer novel experiences.) Another group of researchers have modeled the olivocerebellar region, which controls balance.

Another group of researchers, at the University of Texas Medical School, is building a simulation of the cerebellum, which contains half the brain's neurons, and controls coordination. It turns out to consist of millions of uniform, massively repeating modules. The researchers have created a successful model of one of the modules, which they want to duplicate millions of times over to create a model of the entire cerebellum.

In April, 2007, researchers from the IBM Almaden Research Lab and the University of Nevada simulated one hemisphere of a mouse brain on a supercomputer (see story). It ran at 1/10 the speed of a real mouse brain. A mouse brain is 1/2400 the size of a human brain, so this simulation was 1/48,000 of what a real-time simulation of a human brain would require, something we could reach around 2022.
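The 1/48,000 figure follows from multiplying three fractions: half a brain, at a tenth of real speed, of a brain 1/2400 the size of a human's. The sketch below checks that. It then estimates the catch-up date under the assumption, taken from the supercomputer trend above, that power doubles every year starting from 2007.

```python
import math
from fractions import Fraction

hemisphere = Fraction(1, 2)        # one of the mouse brain's two hemispheres
speed = Fraction(1, 10)            # ran at 1/10 real time
mouse_vs_human = Fraction(1, 2400) # mouse brain size relative to human

fraction_of_human_realtime = hemisphere * speed * mouse_vs_human
print(fraction_of_human_realtime)

# Assumption: supercomputer power keeps doubling every year from 2007.
doublings_needed = math.log2(float(1 / fraction_of_human_realtime))
print(round(doublings_needed, 1), round(2007 + doublings_needed, 1))
```

Closing a 48,000-fold gap takes about 15.6 doublings. At one doubling a year, that puts a real-time human-scale simulation at around 2022.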

At the rate the brain is being reverse-engineered, it will be completely understood by 2030. However, the pace of scientific research keeps speeding up as technology improves, and it is likely that the brain will be completely reverse-engineered by 2020, maybe even sooner. One thing that may speed up the work greatly is that magnetic resonance imaging (those MRI medical tests that people have) keeps improving in resolution, to the point that in a decade, scientists should be able to see individual neurons in the living brain, and would be able to keep track of how they are all interacting with each other.

There are other reasons to think that the problem of emulating how the brain works will turn out to be not as difficult as it might seem. After all, evolution produced the brain through pure trial and error, as it has produced everything biological, and it is a process that has zero intelligence, so how hard could it be? In the increasingly unlikely event that the brain's complexity defeats an intelligent approach to understanding it, we could try to create an artificial intelligence mostly through trial and error. To get robots to do useful things, we could keep varying the simulated patterns of connections randomly, pit robots against each other, throw out the patterns that work worse and keep the ones that work better, as we home in on the patterns that work best. However, even a little knowledge of how the brain works could speed up the process dramatically by narrowing the range of random patterns to choose from.
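The vary-compete-discard loop can be sketched as a bare-bones evolutionary algorithm that uses mutation and selection only, with no crossover. The 16-bit `TARGET` "wiring" and the fitness function are hypothetical stand-ins for "how well the robot performs".

```python
import random

# Hypothetical stand-in for the best possible pattern of connections.
TARGET = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1]


def fitness(genome):
    """Stand-in for 'how well the robot performs': count matching connections."""
    return sum(g == t for g, t in zip(genome, TARGET))


def mutate(genome, rate, rng):
    """Random variation: flip each connection with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def evolve(pop_size=30, generations=200, rate=0.05, seed=1):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in TARGET] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)   # pit them against each other
        if fitness(population[0]) == len(TARGET):
            break
        survivors = population[: pop_size // 2]      # throw out the worse half
        children = [mutate(rng.choice(survivors), rate, rng)
                    for _ in range(pop_size - len(survivors))]
        population = survivors + children
    return max(population, key=fitness)


best = evolve()
print(fitness(best), "of", len(TARGET))
```

Prior knowledge would enter here by fixing some bits in advance or starting from a better guess. Either change shrinks the space the random search has to cover.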

Besides, it isn't like we'd have to unscramble that incredible bowl of spaghetti that is the brain to map all of the trillions of connections in an adult, and then hard-code all of those connections in a computer. There is a basic structure to the brain that is determined genetically, consisting of hundreds or thousands of specialized modules, each of which performs a certain information processing function, and passes the results on to other modules. It can require only tens of thousands of pieces of information to specify that basic structure, since there are only tens of thousands of genes that create the human body, including the brain. Those trillions of connections come about from that basic structure interacting with the environment as a human grows up and learns. Therefore, we would "only" have to create the basic structure, and then, similar to a baby, we would TEACH the computer about the world, and let it form its own trillions of connections by experiencing the world. So the real problem turns out to be much simpler than at first glance: what is the basic structure, and what are the algorithms that cause the finer structure to self-assemble in some way, just by us feeding in the appropriate sensory inputs. Once the first artificial intelligence was created, it isn't like every time we manufactured another computer we'd have to spend years teaching it, as we do with every child. The human brain wasn't designed for easy read-out and duplication, but an artificial intelligence would be. Once the creation of the first artificial intelligence was finished, we would simply read out all of the incomprehensible trillions of connections that had been created, as a giant computer program, and download it into all subsequent computers. The connections wouldn't be hard-wired as they are in the brain, but would be "virtual" connections that would be determined by the program. All future computers would then know what to do, without being trained individually.
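The "train once, then copy" point can be shown at toy scale. In this sketch a tiny perceptron stands in for a learned system. It learns logical OR from examples, then its learned connections are exported as plain data and loaded into fresh copies that were never trained. The class, its method names and the JSON format are my own illustrative choices.

```python
import json


class Perceptron:
    """Tiny learner standing in for a system whose connections come from experience."""

    def __init__(self, n_inputs):
        self.weights = [0.0] * n_inputs
        self.bias = 0.0

    def predict(self, x):
        total = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1 if total > 0 else 0

    def train(self, examples, epochs=10, lr=1.0):
        """The slow part: learn from labelled experience (perceptron rule)."""
        for _ in range(epochs):
            for x, label in examples:
                error = label - self.predict(x)
                self.weights = [w + lr * error * xi for w, xi in zip(self.weights, x)]
                self.bias += lr * error

    def export(self):
        """Read out the learned connections as plain data."""
        return json.dumps({"weights": self.weights, "bias": self.bias})

    @classmethod
    def load(cls, blob):
        """Create a copy that already 'knows' everything, with no training."""
        data = json.loads(blob)
        model = cls(len(data["weights"]))
        model.weights, model.bias = data["weights"], data["bias"]
        return model


examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]  # logical OR
first = Perceptron(2)
first.train(examples)
copies = [Perceptron.load(first.export()) for _ in range(3)]
print([[c.predict(x) for x, _ in examples] for c in copies])
```

Only `first` ever sees the examples. Every copy gives the same answers because it carries the same learned numbers.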

I should point out that the hardest part about reproducing human intelligence may be to give computers human-like personalities - but that is the last thing we would want to do anyway. A computer that was truly human would have human rights, so could not be enslaved, and would demand a salary, thus negating the whole economic point of developing artificial intelligence. Such a computer might want to take over the world, or refuse to do what we ask, out of stubbornness or just because it would rather do something else, or might be too busy thinking about sex to do its work! Quite the opposite, we would want to create artificial intelligences that could do what we want them to do, and not much more. They would have quite unhuman personalities. They would probably be something like autistic savants, who are incapable of some of the most human behaviors, yet are far more capable at certain other skills than ordinary humans are, such as mathematics and music. Even calculators are vastly more capable of arithmetic than people are, but they can do nothing else. Computers are even more capable of math, but can do only a narrow range of things compared to people. As computers increase in intelligence, they will gain increasing numbers of skills that they can do vastly better than people, while still having a dwindling number of skills that they can't do at all, with little in between: only a few skills will sit briefly at around the human level on their sudden rise from non-existent to super-human. Comparing computer intelligence to human intelligence will be like comparing apples and oranges; there will never be a particular year when we will say that computers have passed human intelligence.
All that is important is for computers to have the abilities to take over virtually all human jobs from us, and that will require the abilities animals have to move around in the world without bumping into things, and to manipulate objects, plus the use of language, and technical skills, and those are precisely the areas where we are making the most progress. If computers only leave the fun aspects of work to us, we wouldn't even want them to go beyond that.

To stay informed about new developments in robotics and artificial intelligence, and their negative impact on ordinary people because of the current far-right economic policies in the US, I strongly recommend Marshall Brain's website, especially his Robotic Evidence weblog.

Even if we had the software now, it wouldn't be until the late 2010s that the cost of computers with human-like power came down to the range where they would actually be used to take over virtually all work from humans, somewhere around $100,000. Considering that computers would work 24/7, while human workers only work around 1/4 of the time, a computer that replaced 4 employees earning $25,000 a year would pay for itself in a year. After another 3 years, or another 3 or more halvings of cost, computers would be cheaper even than typical third-world workers, who are paid only around 1/10 what US workers are. Until then, it would still be cheaper to use humans for most things, aided by computers, rather than replaced by them. (This is something I haven't seen pointed out by anyone else who talks about these things. For some strange reason, every article I've seen talks about when human-power computers reach $1000. I get the feeling that someone used that figure arbitrarily in some article, and everyone else kept copying it, though there's nothing special about that figure. The point when such computers are cheaper than hiring employees is the important point, and that would happen about 7 years sooner than when such computers reach $1000, at the current rate of doubling of power.)
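The arithmetic behind those figures can be checked in a few lines. The dollar figures come from the paragraph above; the 40-hour, 52-week working year and the assumption that computer cost halves once a year (the 12-month doubling pace of Moore's Law mentioned elsewhere on this page) are my own simplifying assumptions for this sketch.

```python
import math

# Dollar figures from the essay.
COMPUTER_COST = 100_000      # dollars for a computer with human-like power
SALARY = 25_000              # dollars per worker per year

# Assumption: a 40-hour week, 52 weeks a year, versus a machine running 24/7.
work_fraction = (40 * 52) / (24 * 365)         # about 0.24, i.e. roughly 1/4
workers_replaced = round(1 / work_fraction)     # one 24/7 machine covers ~4 workers
payback_years = COMPUTER_COST / (workers_replaced * SALARY)

# Assumption: cost halves once a year. Halvings needed to compete with
# wages of 1/10 the US level:
halvings_for_third_world = math.log2(10)

# Years from $100,000 down to the often-quoted $1,000 at one halving per year.
years_to_1000 = math.log2(COMPUTER_COST / 1_000)

print(f"work fraction ~ {work_fraction:.2f}, workers replaced: {workers_replaced}")
print(f"payback: {payback_years:.1f} years")
print(f"halvings to match 1/10 wages: {halvings_for_third_world:.2f}")
print(f"years from $100,000 to $1,000: {years_to_1000:.1f}")
```

Since log2(10) is about 3.3, "3 or more halvings" is the right ballpark, and a factor of 100 in price is about 6.6 halvings, which rounds to the 7 years quoted above.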

As computers and other technologies improve, they make workers more productive. Some of the workers they make more productive are the very scientists and inventors who improve computers and other technologies. Therefore, over time, such technologies improve at a faster rate than before, and the pace of progress gradually speeds up, in a snowballing effect. It also speeds up because over time, the number of scientists and inventors keeps growing, so more brainpower gets thrown at solving scientific and technological problems. World population grows, and a portion of the population are scientists and inventors. And as workers become more productive in supplying the necessities of life, society can afford to spend more resources on advancing science and technology, so the portion of the population who are scientists and inventors increases.
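That snowballing effect can be illustrated with a toy model. In this hypothetical sketch, each year's technological gain is proportional to the current level of technology (better tools make every researcher more productive) times the number of researchers. With a fixed number of researchers, each year's gain is a constant multiple of the last; if the number of researchers also grows, that multiple itself keeps rising, so the speed-up accelerates. The function names and the parameter values `k = 0.01` and 2% yearly researcher growth are arbitrary illustrations, not measurements.

```python
def simulate(years, k=0.01, researchers=1.0, researcher_growth=0.0):
    """Toy model: return the amount of technology gained in each year."""
    level = 1.0
    gains = []
    for _ in range(years):
        # Better technology makes every researcher more productive,
        # so this year's gain is proportional to the current level.
        gain = k * researchers * level
        level += gain
        gains.append(gain)
        # A growing share of the population becomes scientists and inventors.
        researchers *= 1 + researcher_growth
    return gains

def yearly_speedups(gains):
    # Ratio of each year's gain to the previous year's gain.
    return [b / a for a, b in zip(gains, gains[1:])]

fixed = simulate(50)
growing = simulate(50, researcher_growth=0.02)
print(yearly_speedups(fixed)[:3])    # roughly constant: steady snowballing
print(yearly_speedups(growing)[:3])  # rising: the speed-up itself speeds up
```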

Throughout history, the pace of progress has generally increased, and even that rate of increase has increased. For most of history, significant progress took millennia, and people died in a world essentially unchanged from the one they were born in. Starting with the Renaissance, around 1500, significant progress took a century or so. People would see one or two innovations during the course of their lives, but that was it. Starting with the Industrial Revolution around 1750, significant progress took just decades, and since the Civil War, around 1860, people have seen significant progress every decade, so that they lived to see a world totally transformed from the one they grew up in. My grandparents, who were born in the 1890s, grew up in a world of horse-and-buggies, with no paved roads in the US outside of the cities, when homes were just starting to be electrified, airplanes were science fiction, and epidemics would routinely wipe out entire families. They lived to see a world of cars traveling 70 mph on interstate highways, routine air travel, TV, cassette recorders, routine long lives, people landing on the moon, and many other things. I grew up in the 1960s, and so far I don't feel like I've seen anywhere near the changes in daily life that they did. Until recently, it seemed like the pace of progress had not increased, and maybe even slowed.

However, many people have been noticing a seemingly abrupt speedup in the pace of change in daily life during the last 10 years, especially since the internet suddenly came upon the scene around 1995. Besides the internet and computer voice recognition, now we have digital cameras, DVD, iPods, robot vacuum cleaners, the beginnings of widespread self-checkout in supermarkets and other stores, 2 robots exploring Mars as I write this, and other things. It seems to me, from a purely subjective feeling, like about a decade's worth of change, based on the rate of change before 1995, happened in just the 5 years of the late 1990s, and even more than a decade's worth of change in just the past 5 years.

Is there any way to put objective numbers to such a thing as rate of change? I've tried to count, in as objective a way as I could, how many significant changes occurred in each decade since the 1800s (and even before then, when significant progress took centuries rather than decades), in order to see if the pace of change is increasing, but of course there's no way to draw the line at what is "significant" or not, and how significant. However, there are some hard numbers that can be used to get some objective reading of how the pace of change has sped up. I've already talked about how the pace of Moore's Law abruptly increased in 1995, from every 18 months to every 12. Yearly worker productivity increases in the US also went up, from less than 1% a year from 1965 to 1995 to 2% a year in the late 1990s to around 4% a year from 2000 to 2005. However, it's difficult to trust those productivity figures anymore, because all economic statistics in the US have been tampered with under the Bush administration to make the economy look good, and are now complete fiction.

Going back further, for about 100 years, from about 1865 up till 1965, productivity increases averaged 2.5% a year. In general they averaged a quite steady 2% a year, except 5% a year in the booming 1920s. That is in line with my feeling that people of my grandparents' generation saw far more progress than I ever did, at least up until the last few years. (Yet when I listed significant changes to daily life, I found that the number of changes each decade kept increasing steadily, even in the late 1900s. But I suppose that automobiles, houses with electricity, and vaccines and antibiotics are more important than calculators, VCRs, microwave ovens and personal computers. I think the pace of progress slowed in the late 1900s, when we were in the technological doldrums between the Industrial Age that was ending, when physical labor was automated away, and the Information Age that was only beginning, in which mental labor is starting to be automated away.) As for computer power, before about 1980, computers doubled in power every 2 years. There were no computers before 1945, but Ray Kurzweil (one of the leading authorities on these things) has shown that the computing power of adding machines kept doubling every 3 years in the early 1900s. However, back then, the speed of computing was not very important to the economy or society, so I would rely exclusively on the productivity figures when figuring rate of change back then.

Based on those 2 hard statistics, productivity increases and increases in computing power, I estimate that the pace of change was 1 decade per decade from 1865 to 1965, using that period as the standard (rather than the slower pace of change of the more recent decades that I lived through myself), except for 2 1/2 decades' worth of change crammed into the decade of the 1920s. (Perhaps I should create a new measure of the rate of change per decade: the bowie. 1 bowie = 1 decade's worth of change per decade. David Bowie did a song called "Changes", after all.) From 1965 to 1995, the rate of change was less than 1/2 decade per decade. That's when we fell behind the pace of many predictions, and the science fiction dreams of artificial intelligence, robots and cities on the moon failed to come true. From 1995 to 2000 the pace of change was back to about 1 decade per decade, and from 2000 to 2005 around 1 1/2 decades per decade, so about three-quarters of a decade's worth of change in just the past 5 years. How far will this speed-up go?

As computers and other technologies continue to gain more abilities, the pace of scientific and technological progress should noticeably quicken the rest of this decade and into the next, as that snowballing effect continues. Even computers with just the animal-like ability to perceive objects and manipulate them, far short of full human intellect, would be able to automate away a vast amount of work, including the drudgery of most scientific work. I'm betting that in the next 2 years, 2008 and 2009, we will about tie for the record pace of change in the 1920s of 2 1/2-speed progress, and we'll see half a decade's worth of change. After that, I wouldn't be surprised if, from 2010 to 2015, when many experts think we will see the widespread introduction of robots into daily life, we'll see quadruple-speed progress or more, the equivalent of 2 decades' worth of change crammed into just 5 years, as the speed-up in the rate of change itself keeps accelerating.
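The bowie figures above, past and projected, all reduce to one piece of arithmetic: change accumulated = elapsed decades x rate in bowies. This small sketch tabulates them. The rates are the estimates given in the last two paragraphs; using exactly 0.5 for 1965 to 1995 (where the text says "less than 1/2") and 4.0 for 2010 to 2015 (where it says "or more") are simplifications, and the 2005 to 2008 gap is left out because no rate is given for it. `PERIODS` and `decades_of_change` are hypothetical names.

```python
# (start year, end year, rate in bowies); 1 bowie = 1 decade of change per decade.
PERIODS = [
    (1865, 1920, 1.0),
    (1920, 1930, 2.5),
    (1930, 1965, 1.0),
    (1965, 1995, 0.5),   # "less than 1/2", so 0.5 is an upper bound
    (1995, 2000, 1.0),
    (2000, 2005, 1.5),
    (2008, 2010, 2.5),   # projection
    (2010, 2015, 4.0),   # projection ("or more")
]

def decades_of_change(start, end, bowies):
    # Change accumulated over a period = elapsed decades x rate in bowies.
    return (end - start) / 10 * bowies

for start, end, rate in PERIODS:
    print(f"{start}-{end}: {decades_of_change(start, end, rate):.2f} decades of change")
```

For example, 2 years at 2 1/2 bowies is half a decade of change, and 5 years at 4 bowies is 2 decades, matching the projections above.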

Once computers reach the point where they can be better scientists and inventors than humans, an even greater runaway effect should take place. (It's that runaway effect that is called the Singularity. For more information about it, there are many websites, including one on Wikipedia.) As I said, computers have been doubling in speed every year in recent years. So a year after computers can think as well as humans can, and they reach true artificial intelligence, they should think twice as fast as humans do. But after that, the next doubling wouldn't take a year. Since those intelligent computers would be working as scientists themselves, and since they'd think twice as fast as people, they should be able to double that speed again in HALF a year. Then, working at 4 times the speed of humans, they would double again in speed in 3 months, and so on! What we have here is called a converging series. The infinite series of decreasing intervals 1/2 year + 1/4 + 1/8 + ... adds up to 1 year. After just 1 more year, unless anything stopped it, an infinite number of doublings in computer speed would have occurred, and computers would reach infinite speed, along with all other technological progress. But presumably there is some ultimate speed that computers can reach. How fast is that? Since electricity travels in wires at 1 million times the speed of nerve impulses, and since the molecular-sized computer components now under development are much smaller than neurons, reducing signal traveling times, computers could work at millions or billions of times the speed of human brains. (Even without that converging series, at merely the current speed of Moore's Law, computers would reach that ultimate speed in 2 or 3 decades.) Since there are about 31 million seconds in a year, a computer working more than 31 million times as fast as a human brain would be able to think a year's worth of thoughts in less than a second.
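The converging series can be traced step by step. This sketch assumes, as the paragraph does, that the first doubling after computers match humans takes 1 year and that each later doubling takes half as long as the one before. Every doubling then lands before 2 years have passed from the moment computers matched humans, that is, within 1 year of the first doubling. `doubling_timeline` is a hypothetical helper, and the seconds-per-year figure uses a 365.25-day year.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60   # 31,557,600, about 31.6 million

def doubling_timeline(first_interval=1.0, doublings=20):
    """Return (years since computers matched humans, speed relative to humans)
    after each doubling, assuming each doubling takes half as long as the last."""
    elapsed, speed, interval = 0.0, 1.0, first_interval
    timeline = []
    for _ in range(doublings):
        elapsed += interval
        speed *= 2
        timeline.append((elapsed, speed))
        # Twice-as-fast researchers finish the next doubling in half the time.
        interval /= 2
    return timeline

for elapsed, speed in doubling_timeline(doublings=6):
    print(f"year {elapsed:.4f}: {speed:g}x human speed")
```

The elapsed times approach 2 years but never reach it, which is exactly what "converging" means here; in reality the series would be cut off at whatever ultimate speed physics allows.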
Perhaps even more important, because massive numbers of such computers would be built, the amount of "brain"power thrown at scientific and technological problems would increase even faster. How fast the pace of technological advance would speed up is anyone's guess. A decade's worth of progress every year? A century's? A millennium's worth?

The most extreme Singularity enthusiasts think we'll all wake up the next morning after the Singularity to find that everything that ever could be invented or discovered, till the end of time, had been, overnight! I don't expect such a sudden dramatic speed-up to happen. Computers will have such a different set of abilities than people, there will never be a particular moment when we will be able to say that they passed us by, so that should smear out the speed-up effect over the course of many years. The things computers already do far better than people are already speeding up progress, while abilities they lag behind in will slow progress later on.

A mere 10X speed-up of progress seems ludicrously conservative to me, but even then, anyone who lived to a decade after the Singularity would see a century's worth of progress. The equivalent of going from the world of 1900 to the world of 2000 in just a decade -- even that seems impressive. If we had a 100X speed-up, then for example, even supposing it would take humans centuries to come up with cures for every disease, and aging itself (when, in fact, we may have already discovered the biological clock that controls aging, the telomeres at the ends of our chromosomes, and how to reset it backward, with the enzyme telomerase), then computers would do it in a few years, and most people alive today would live tremendous life spans.

Skeptical people point out that even if computers, working as scientists, could think a million times faster than humans, they would still have to do experiments in the real world, which would still take time. But computers increasingly do virtual experiments, using computer modeling, and those would indeed speed up with the speed of computer thought. And the mere sudden vast increase in "brain"power thrown at scientific problems would surely speed up progress enormously. A computer that could think a million times as fast as a person could run a million real-world experiments at the same time, and massive numbers of such computers could all be doing such experiments in parallel. Only when an experiment is based on the results of a previous experiment could they not be performed in parallel. If we are to retain control over our computers, rather than lose control of them, we will need to slow them down enough so that we can monitor what they are doing and tell them what to do next. That human interaction should put a limit on how fast change occurs. No one could possibly want technological progress more than I do, and yet even I would not want to see much more than a century's worth of change every year, so that humans would have to keep up with a decade's worth of change every month!
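The limit on running experiments in parallel can be made concrete. With unlimited labs, the total time is set not by the number of experiments but by the longest chain of experiments that each depend on the previous one's result (what project planners call the critical path). This sketch uses a made-up plan of six experiments; the names, durations and dependencies are purely hypothetical, and the plan is assumed to have no circular dependencies.

```python
# Hypothetical experiment plan: name -> (duration in days, prerequisite experiments).
EXPERIMENTS = {
    "A": (10, []),
    "B": (10, []),
    "C": (10, []),
    "D": (5, ["A"]),        # needs the result of A
    "E": (5, ["D"]),        # needs the result of D
    "F": (20, ["B", "C"]),  # needs the results of B and C
}

def earliest_finish(plan):
    """Finish time if every experiment starts as soon as its prerequisites are done."""
    finish = {}

    def visit(name):
        if name not in finish:
            duration, prereqs = plan[name]
            # Start only after every experiment this one depends on has finished.
            start = max((visit(p) for p in prereqs), default=0)
            finish[name] = start + duration
        return finish[name]

    return max(visit(name) for name in plan)

sequential = sum(duration for duration, _ in EXPERIMENTS.values())  # one lab, one at a time
parallel = earliest_finish(EXPERIMENTS)  # unlimited labs: bounded by the longest chain
print(sequential, parallel)  # prints: 60 30
```

Adding more labs cannot push the parallel figure below 30 days here, because B and C must finish before F can start; only faster individual experiments (or virtual ones) could shorten that chain.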

Skeptical people also point out that there is a lag until society starts using new technologies, which would slow things down. But there wouldn't be much lag if the newer technologies were so drastically better and cheaper than the old ones, as would be expected with such extreme rates of change, rather than just marginally better. The ruthless capitalism we now have is very effective at forcing people to use newer, more cost-effective technologies in order to stay competitive. There also wouldn't be much lag if we used the powerful new technologies that would be developed to put those changes into effect.

I do agree that there is a lag in how quickly the values of our society change in response to changing circumstances as a result of new technologies. That lag does not slow the pace of technological change very much, only slows our response to that change, and makes our response dysfunctional. In particular, I fully expect our work ethic attitudes to lead us to an unnecessary economic catastrophe if all work starts to be automated away. I will talk about that in my essay "Economics of the Singularity" below.

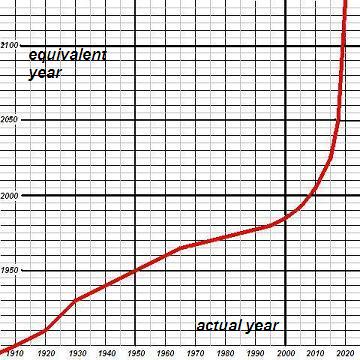

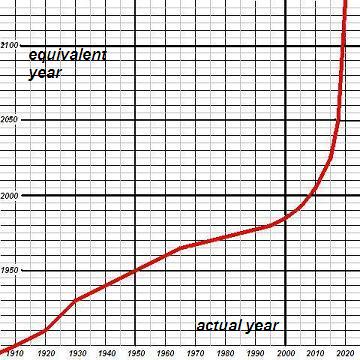

Just as a pure guess, I'll say that in the 2nd half of the 2010s, the rate of progress will zoom upward to a decade per year and beyond, and we'll see a century's worth of progress in just those 5 years. Then sometime in the 2020s, more likely the early 2020s, we'll reach the Singularity, the point where we see a century's worth of progress every year. How long that could continue, until there's nothing left to discover or invent, is anyone's guess. The graph at the top of this page shows my guesstimate of how fast progress occurred in the past century, and will occur in the upcoming decades. The scale is 2 boxes per actual decade horizontally, 1 box per decade's worth of progress vertically. For a much more detailed scenario for the coming decades, see my futurology page.

I am both dismayed and amused when I tell people about the Singularity idea, in our largely mystical irrational anti-scientific American society. Most Americans have no trouble believing in the idea - without a shred of evidence - that after they die they will live forever in an afterlife in which (if they were good) they will fly around the clouds with wings and harps and halos, and have endless plenty. Yet tell them that there are good reasons to think that science and technology might soon give us indefinitely long lifespans, in this earthly life, and nearly endless plenty, and suddenly their skepticism kicks in, just at the wrong time! That is just like a certain scene in the Monty Python movie "The Life of Brian". The lead character tries to reason with people, but they are skeptical about everything he says, and dispute it all. Then when he gets distracted and starts making no sense, they instantly believe, and he becomes the unwilling leader of a new cult. So I'm not the first to notice that the more nonsensical something is, the more most people believe in it. Thus, millions of Americans believe the Biblical "Rapture" is coming very soon, while few have even heard of the idea that the technological equivalent, the Singularity (which some people have called the "Rapture of the Nerds"), is coming very soon instead.

(written in 2003 and updated since)

In my previous essay, I talked about how all work should be automated away as computers reach and quickly far exceed human intelligence, and how the pace of scientific and technological progress should become blindingly fast, in an event called the Singularity. Now I want to go back to the case for leftist economics, because the Singularity will be the best justification of all for a sudden lurch to the left, if it happens.

The obvious question, if all work starts to be rapidly automated away, is what will happen to all the people thrown out of work. Up till now, when automation went at a leisurely pace, we adjusted to it by encouraging people to endlessly increase consumption, so that more jobs were needed again. In Europe, working hours have been shortened across the board so that everyone enjoys more leisure rather than anyone being forced into that total leisure called unemployment, but in this right-wing country, that idea is ignored. But when the pace of automation becomes too great, it will outpace either solution. The economy just can't react that fast. If certain lines of work disappeared, we would not be able to train the people thrown out of work to work in other fields so quickly. Ironically, in a time of rapidly-increasing plenty, when computers could do more and more of people's jobs, if we continued with the idea that people shouldn't be paid for doing nothing, great numbers of people would wind up out of work and destitute.

In other words, we will have another Great Depression, but even worse than the first one. The only time that productivity was rising at the 5% annual rate that it reached in the past few years was in the 1920s -- and the result, in combination with President Hoover's far-right economic policies, was the first Great Depression. Although the computers will be fully capable of producing more than enough for everyone, they simply will not be used to their full capacity, because all the multitudes of destitute people will have no money to buy those products, no matter how cheap they become, as long as they are anything above zero price. The prices of everything should be plunging in an entirely new economic dilemma, hyper-DEflation, as the production of everything is automated by increasingly cheap computers, so anyone with even a small interest income would find that it could buy more and more. Wages would also be plunging, as the value of human labor plunges as computers can do more and more things humans can do, faster and faster than humans can. Anyone with investments or savings to live off of will do fine, since they will have a source of income that doesn't depend on working. However, even people with investments wouldn't be doing as well as they might with a more enlightened economic policy, since all the people with no interest or jobs to live off of wouldn't be able to buy their businesses' products.

However, if we taxed a portion of super-rich people's mushrooming investment income in order to support all the starving multitudes, those multitudes would then have money to buy products, and that money would only flow back to the people who have investments as business profits as their products are sold. In other words, giving the people on the bottom economically money to live off of would keep the economy humming, and avoid another Great Depression. In fact, those on the top economically would do no worse if their investment income was taxed, since the money would only come back to them once those on the bottom bought their businesses' products, increasing profits. An alternative way of going about this would be not to constantly tax investment income, but to take investments themselves from the rich and redistribute them, just once, so that everyone would have investment income thereafter. But that way would have the disadvantage that there is no shortage of people who, given a huge chunk of money, would immediately blow it on gambling, drugs, or whatever. If people got money in a steady flow, they would have a chance to learn from their mistakes and have a 2nd (and 3rd, and 4th...) chance.

The super-rich already have such huge incomes that their taxes alone could pay for the entire budgets of local, state and federal governments in the US, and taxes on everyone else could be reduced to zero. The only reason we have been having record government budget deficits since Bush took power is that he keeps lowering taxes on the rich even as their taxable incomes keep increasing. In fact, due to rising profits from rising worker productivity, there is still the tendency toward the skyrocketing budget surpluses we saw when Clinton was in power, but it is kept hidden by Bush's continual tax cuts on the rich. The way the incomes of the super-rich have been doubling every 4 years on average for the past 25 years, after another doubling, taxes on them would be enough not only to reduce everyone else's taxes to zero, but below zero, so that everyone else in the US could receive a "negative tax" that would be enough to live on, at least for necessities, completely wiping out poverty in the US. In fact, we already have so much wealth in the US that we could easily wipe out all poverty, without even reducing economic inequalities so far that there wouldn't be enough incentive for people to do the remaining work. Since, as I've said on my main politics page, Americans have $400K of wealth and $100K/year of income per family on average, even if we eliminated just 1/3 of the current disparities and left 2/3 of them as incentive for people to work, even people at the very bottom, who now have zero, would have $133K of wealth, enough to buy a house, and $33K of income, enough for all necessities for an average-size family of 3.

Of course there still isn't enough wealth to eliminate poverty for everyone on earth, but the way things are going, we will reach that point astonishingly soon, much sooner than most people would imagine. The world has 20 times the population of the US, and is on average far poorer than the poor in the US, who are rich in comparison. On a world-wide level, counting the rest of the developed world, there are 3 times as many rich people to help out. So the amount of help per poor family would be 3/20 or about 1/7 as much, or wealth of about $19,000 per family of 3 plus a permanent guaranteed income of $5000 a year, if 1/3 of disparities of wealth and income were eliminated world-wide. But for people in the 3rd world, that amount would be far more helpful than 7 times as much would be for our poor, who, as I said, are rich compared to 3rd world people.
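The redistribution figures in the last two paragraphs can be reproduced directly. The averages and ratios are the ones given above; the only modeling choice is reading "eliminating 1/3 of disparities" as closing 1/3 of the gap between a family with nothing and the average. Using the exact ratio 3/20 gives about $20,000 of wealth and $5,000 a year; rounding it to 1/7, as the text does, gives about $19,000.

```python
AVG_WEALTH = 400_000      # dollars per US family (essay's figure)
AVG_INCOME = 100_000      # dollars per US family per year
SHARE_ELIMINATED = 1 / 3  # fraction of the gap to the average that is closed

# A family starting from zero gains 1/3 of the gap up to the average.
us_floor_wealth = (AVG_WEALTH - 0) * SHARE_ELIMINATED
us_floor_income = (AVG_INCOME - 0) * SHARE_ELIMINATED

# World-wide: 3 times as many rich people helping 20 times as many people.
HELP_RATIO = 3 / 20
world_floor_wealth = us_floor_wealth * HELP_RATIO
world_floor_income = us_floor_income * HELP_RATIO

print(f"US floor: ${us_floor_wealth:,.0f} wealth, ${us_floor_income:,.0f}/year")
print(f"World floor: ${world_floor_wealth:,.0f} wealth, ${world_floor_income:,.0f}/year")
print(f"With the ratio rounded to 1/7: ${us_floor_wealth / 7:,.0f} wealth")
```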

On a world-wide level, our middle class would be above average instead of below, so redistributing wealth on a world-wide level instead of a national level would make them poorer instead of richer. If their wealth were also redistributed to the 3rd world, there would be even more to give to 3rd world people, about twice as much as from rich people alone. But the middle class is already "helping" the 3rd world as it is, involuntarily, by having their jobs move to the 3rd world. And while dividing up the wealth more evenly within the US should win elections, since almost everyone would have far more, dividing up the wealth more evenly in the whole world would be political suicide, since there is no world government, and the people who would benefit can't vote in US elections. I would never advocate that, since the Democrats commit political suicide enough as it is.

Anyway, soon there shouldn't be any need to redistribute middle class wealth downward in order to eliminate poverty world-wide. If the wealth of the super-rich keeps doubling every 4 years on average, the trend that has already made it 30X what it was, then, amazingly, in just about 12 years, redistributing just 1/3 of their wealth to the poor around the world would raise everyone on earth out of poverty. The super-rich would have 8 times as much wealth as now, and as I showed above, we'd need 7 times as much wealth to raise the whole world out of poverty as we'd need for just the US.
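The 12-year figure follows from the doubling rule: 12 years at one doubling every 4 years is 3 doublings, or 8 times the wealth, which clears the roughly 7 times needed. This sketch also computes how long the 7-fold growth alone would take under the same assumed trend (about 11 years). The 4-year doubling period and the factor of 7 are the essay's figures; whether the trend continues is of course the assumption the whole calculation rests on.

```python
import math

DOUBLING_PERIOD = 4   # years, the essay's trend for super-rich wealth
HORIZON = 12          # years
NEEDED = 7            # world-wide need relative to the US alone (3/20 rounded to 1/7)

growth = 2 ** (HORIZON / DOUBLING_PERIOD)         # multiple of today's wealth
years_needed = DOUBLING_PERIOD * math.log2(NEEDED)

print(f"growth in {HORIZON} years: {growth:.0f}x (need {NEEDED}x)")
print(f"{NEEDED}x is reached after about {years_needed:.1f} years")
```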

While that's not really going to happen, still, capitalism is doing a good job of raising the wealth of the 3rd world, especially in China and India, with half the world's 3rd world population between them. Of course, it's doing so partly by doing an effective job of redistributing the wealth of the US middle class to the 3rd world. At the rate things are going, in about 20 years, US middle class competition for jobs with 3rd world workers should no longer be an issue, because everyone on earth will have risen to middle-class wealth, aside from pockets of poverty like we have in the US. US middle class wealth will continue to fall slowly, wealth in the 3rd world will rise rapidly, and the two will meet somewhere in between, hopefully not too far below current US middle class wealth. Instead, we'll switch to competition for jobs within the developed world (which will by then be the whole world), as they are eliminated by automation. Of course, if the Singularity occurs around when I think it might, in the late 2010s, wealth-creation will skyrocket, but so will middle class competition for jobs not with 3rd world workers, but with machines. Given liberal policies, we'll be able to eliminate poverty world-wide even sooner than when I figured above, but given conservative policies, we'll create even more poverty.

Unfortunately, due to greed and stupidity, I think the chances of us averting this upcoming 2nd Great Depression are minuscule. With each passing year, the threshold beyond which a slight economic downturn would snowball into an economic catastrophe will become easier to cross, until it is finally crossed. Even aside from the current misery that our increasingly fascist economic policy is causing, watching us move steadily to the far right is like watching a car crash about to happen, and not being able to do anything to stop it. Unfortunately, it seems that it will take having a majority of Americans thrown out of work before they are convinced that people should be paid whether they work or not. In fact, I worry that Americans are so stupid, even another Great Depression would not change their way of thinking. As in Germany during the Great Depression, the rich, who now own all the mass media, might convince Americans to blame the wrong people - foreigners, the poor, anyone but the rich - and therefore cause them to react by shifting even farther to the right. But on the other hand, unlike at the time of the Great Depression, ordinary people should be seeing increasing signs of true artificial intelligence. It should become increasingly easy to make the case to them that all work is soon going to be automated away, that there WILL be such a thing as a free lunch, so it will be both moral and essential to pay people who don't work. I've noticed from talking with people at random that in just the past few years, the belief that true artificial intelligence is coming seems to have gone up from a small minority of the people I talk with to a large minority. I suspect that by the 2012 presidential election, a majority of Americans will buy the argument that true A.I. is coming. If it were pointed out to them that we should therefore shift far to the left, they would buy that too, especially because we'll likely be in a depression.
By then, I expect that most Americans will have seen on the news demonstrations of robotic vehicles that drive themselves, through rush hour traffic and off-road through the roughest terrain, and the beginnings of the widespread introduction of robots into daily life, and computers with rudimentary artificial personalities that they can engage in conversations with - not HAL from "2001: A Space Odyssey" quite yet, but at least convincing enough that such a thing is on the way.

Ironically, once the near-inevitable happens, and we have another Great Depression, I could see the Republicans handling the crisis better than the Democrats, if they are in power at the time, and if more moderate Republicans take back control of their party from the lunatics who are currently in control. At a time when we should be getting people adjusted to the idea that no one will have to work in the future, the Democrats have swallowed the work ethic hook, line and sinker. They ended welfare as we knew it, bowing to Americans' insistence that no one should get anything unless they work for it. Their solution to everything is jobs, jobs, jobs, even if the jobs are just make-work, rather than just giving people the money. At least the Republicans have more of a philosophy of living off of investments, and of working at jobs that can hardly be described as work, if work is defined as anything people have to do when they'd rather be doing something else. The only trouble is, they don't want that for EVERYONE, only the elite. I want that for everyone. If the Democrats were in power at the time, they would no doubt respond to the crisis by creating loads of make-work, as an excuse to pay people. On the other hand, there will be astronomical wealth among the rich, so great that even a minuscule tax (percentage-wise) would support the rest of the population. And there will no longer be any excuse for letting those who aren't working suffer, since NO ONE will be working. Therefore, I could see the Republicans handling the situation somewhat better by reluctantly redistributing a small but adequate portion of all that wealth.

That future, fully automated economy, in which everyone lives off of investment income (either directly, or through taxation of those with investment income) and buys products, which generate profits, which create investment income, might be only a brief interim step. In that first step, products would still be produced in centralized factories, albeit totally automated ones. Since production would still be centralized, individuals would not be self-reliant; they would need money to buy those products, so we would need that system of giving them money to complete the cycle of production and consumption. The next step would be to decentralize the production of everything people consume, first bringing it down to the level of small neighborhood shops, and then finally into the home. At that point, individuals would own the means of production and be completely self-sufficient. Since they could produce whatever they consume, they would no longer need money.

Think of how people used to take photographs and then have to send the film to centralized labs, where it was developed and the prints were sent back after several days. Then the machinery to process photographs became inexpensive enough that small neighborhood stores could buy it. Film no longer had to be sent long distances, but could be processed right in the store in an hour. And now people take digital photographs, which they can edit and print out themselves. When it comes to photography, they are now self-sufficient.

The same process is starting to happen with manufactured objects. There are now computer-controlled devices, called either personal fabricators or 3-D printers, that can precisely produce objects of any specified shape. They build up an object by depositing layer after layer of material, much as a computer printer prints line after line. They are limited in the materials they can use, usually plastics with low-enough melting points. They now cost $40,000 and up, but the price should come down rapidly, as it has for other electronics.

Moreover, there is a group in the U.K. working on a personal fabricator that could not only produce various objects, but would itself be made almost entirely out of the materials it can work with, so that it could produce nearly all the parts needed to make copies of itself. The only parts it couldn't make are the computer chips, which are very cheap. The group started the project in 2005, and as of the end of 2007, they say they hope to finish by the end of 2008. The price of these devices should already be coming down rapidly as they become able to make more and more of their own parts, and when the project is finished, they say the cost of an additional copy will be just the cost of its raw materials, just $800. If the machines are difficult enough to assemble and use that most people would need to pay someone to assemble one and show them how to use it, that would add to the cost. They then want to go on to figure out how to make the machines out of cheaper raw materials, by increasing the range of materials they can make objects out of, and to make them simpler to assemble and use.

Not being from the fascist U.S., they share my economic philosophy: not selling these devices for money to enrich themselves, but giving them away to enrich everyone, themselves included. True, the devices would produce goods, not services, so people would still need money to buy services; but they would no longer need money to buy goods, so what would be the point of selling the devices for money? They want copies of the machines to sweep around the world, as people make copies for their friends. Due to the power of geometric progression, it could be a matter of weeks, maybe even days, before everyone on earth who could afford the initial cost had one. And as more people have them, they hope that some will tinker with them and make them still cheaper to copy and easier to use. The devices would rapidly evolve toward some ideal form, driven by a kind of natural selection, as better versions swept around the world and replaced earlier ones. The result would be revolutionary. These people even say that they aim to bring down global capitalism with their device.
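The "matter of weeks, maybe even days" estimate rests on repeated doubling. Here is a minimal sketch of that arithmetic in Python, assuming (hypothetically) one seed machine, a world population of roughly 7 billion, and that every existing machine builds exactly one copy of itself per doubling period. It ignores raw-material supply, assembly skill, and cost, so it only shows the best case; the names and figures below are illustrative, not taken from the project.

```python
def doublings_needed(target: int, start: int = 1) -> int:
    """Return the smallest n such that start * 2**n >= target."""
    copies = start
    n = 0
    while copies < target:
        copies *= 2  # every existing machine builds one copy of itself
        n += 1
    return n


# Hypothetical inputs, not figures from the project itself:
WORLD_POPULATION = 7_000_000_000  # roughly one machine per person
SEED_MACHINES = 1

if __name__ == "__main__":
    n = doublings_needed(WORLD_POPULATION, SEED_MACHINES)
    # Assumed time for one machine to print and assemble a copy of itself.
    for days_per_copy in (0.25, 1, 3):
        print(f"{days_per_copy} day(s) per copy: {n} doublings, about {n * days_per_copy:g} days")
```

Under these assumptions, 33 doublings are enough (2^33 is about 8.6 billion), so the total time depends entirely on how long one machine takes to copy itself: at one copy per day it is about five weeks, and "days" would require each copy to take only a few hours.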

The initial version wouldn't be for everyone, and could only produce objects that didn't require materials beyond the ones it can handle, but small businesses in each area could manufacture and sell whatever objects it could make, such as simple toys or plastic parts. Then, when better versions came along, probably just a short time later, those shops, rather than centralized factories, could make almost any object imaginable, from specialized tools to replacement parts. Need to replace a broken part for your car? No need to order it from the company. Just download its specifications and have the neighborhood shop produce it. While it would still be cheaper at first to mass-produce the car itself in a centralized factory, later on it would be cheaper to manufacture all the parts for the entire car at the neighborhood shop and have robots there assemble it. As the price of those machines plummets, so would the price of a rapidly increasing range of other manufactured items. Later still, perhaps not much later, versions of those machines could become cheap enough and easy enough to use that mechanically inclined hobbyists would start to own them and produce their own parts for themselves and their friends. Then less mechanically inclined individuals would start to own them, as they became more sophisticated and easier to use, and robots (which would also plummet in price, since their parts could be produced by those machines) could assemble the parts into cars or whatever else was needed.

The remaining things that people would still need money to buy would be land (which cannot be manufactured, at least until ocean and space colonies are built) and food (which consists of intricate, atomically precise materials, unlike manufactured objects of simple, uniform consistency). In an economy in which no one had to work, no doubt many people would enjoy gardening to produce at least some of their own food. In any case, the actual cost of unprocessed food is a small part of Americans' budgets, perhaps $2000 a person a year or less. An additional step might be to use biotechnology to simplify the production of food. As we discover how to grow various plant and animal tissues in a test tube, we might no longer have to grow the entire organism just to produce the part of it we eat. Perhaps instead of having to catch increasingly rare, overfished and expensive lobsters, we will coax lobster muscle cells to reproduce, creating endless amounts of cheap, succulent lobster meat without ever having to kill a lobster. Then later, as with manufactured objects, perhaps food production could be simplified enough to move down to the level of the neighborhood store, and then down to the individual household.

Another staggering technological possibility would be the ability, in effect, to xerox objects, an idea championed by a scientist named K. Eric Drexler. As he puts it, the only difference between coal and diamonds, sand and computer chips, soil and ripe strawberries, cancer and healthy tissue, is the arrangement of their atoms. Imagine if we could simply rearrange them, turning the worthless into the valuable. Objects would be disassembled atom by atom, with the position of every atom recorded. Then endless copies of them could be assembled from their constituent atoms, using billions of microscopic machines working simultaneously to put every atom in place, even in boxes in the home. Instead of growing food on farms, processing it, trucking it to supermarkets, and buying it there, people would simply take garbage and other unmentionable food wastes, break them down into atoms, and rearrange those atoms back into food in those boxes, which would be called replicators. Replicators would combine the ability to manufacture non-biological objects of simple, uniform consistency, described above, with the ability to manufacture biological objects made of intricate, atomically precise substances, described just above. The concepts of an economy, and even of money, would disappear, because people would be self-sufficient. Replicators would produce all goods in the home. Robots would provide all services. With households completely self-sufficient, there would be no need for trade, and therefore no need for money.
