Augmented Reality

Augmented Reality (AR) is a relatively new research field. Its basic concept is to place information into the user's perception, registered with the environment. In most cases, this means placing visual information (computer graphics) into the user's field of view. One of the main challenges of AR is to keep these artificial objects registered to the real world, so that they appear to the user to be fixed to the environment. About 50% of the current work in AR is devoted to developing tracking approaches that provide low latency and high accuracy and that are not too cumbersome for the user to wear. AR is not limited to the visual sense: all other senses can be augmented by AR means as well.

This page is far too short to give a comprehensive overview of the field of AR. The interested reader is advised to do a web search for "Augmented Reality". On these pages, I will only present my own work in the AR domain, which I have been doing since 1997.

One of my technical interests in the AR domain is applying computer vision methods to tracking and registration. For use in an indoor environment, I designed a set of circular markers that can be placed in the environment to provide visual anchors for registration. In combination with V. Sundareswaran's Visual Servoing approach, we achieved very fast tracking, and we patented this specific concept.
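The closed-loop idea behind this kind of registration can be illustrated with a small sketch. It is not the patented method: it assumes the overlay only needs a 2D image-plane translation, and the names `servo_step` and `register`, the gain, and the marker coordinates are all hypothetical. Each iteration measures the error between where the overlay draws the markers and where they are observed, then corrects the overlay by a fraction of that error.

```python
def servo_step(model_pts, observed_pts, offset, gain=0.5):
    """One proportional correction of a 2D overlay offset (dx, dy)."""
    n = len(model_pts)
    # Mean feature error: where the overlay currently draws each marker
    # minus where the camera actually sees it.
    ex = sum(mx + offset[0] - sx
             for (mx, _), (sx, _) in zip(model_pts, observed_pts)) / n
    ey = sum(my + offset[1] - sy
             for (_, my), (_, sy) in zip(model_pts, observed_pts)) / n
    # For a pure translation the feature Jacobian is a stack of identity
    # blocks, so its pseudo-inverse applied to the error is the mean error.
    return (offset[0] - gain * ex, offset[1] - gain * ey)


def register(model_pts, observed_pts, iterations=30, gain=0.5):
    """Iterate the correction, starting from a zero offset."""
    offset = (0.0, 0.0)
    for _ in range(iterations):
        offset = servo_step(model_pts, observed_pts, offset, gain)
    return offset


# Hypothetical marker centers in the overlay model (pixels) ...
model = [(0.0, 0.0), (100.0, 0.0), (100.0, 80.0), (0.0, 80.0)]
# ... and the same markers as detected in the live image.
seen = [(x + 12.0, y - 7.0) for x, y in model]

offset = register(model, seen)
print(offset)  # approaches (12.0, -7.0)
```

With a gain below 1, the remaining error shrinks by a constant factor per frame, which is why the overlay appears to glide into place rather than jump. A full system would correct a six-degree-of-freedom camera pose instead of a 2D offset.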

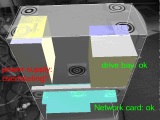

To demonstrate this ring tracker in conjunction with the Visual Servoing approach, we built a system for "distributed device diagnostics" and demonstrated it using a PC as the device to be diagnosed. The AR was shown in video-overlay mode, in which the live video was merged with a 3D CAD overlay.

These images show how tracking in an indoor scenario can be performed with a simple set of custom-defined fiducial markers. This circular marker pattern proved to be very reliably detectable in a cluttered environment such as a typical laboratory. The pictures show these markers affixed to a PC with a CAD model overlaid. Visual tracking was achieved at 10 fps on a 200 MHz PC.

At the Rockwell Automation Fair 2003, our team demonstrated an advanced version of this concept. Fiducial markers were placed around an operating pump demonstration; a head-worn combination of monocular display and camera provided a direct see-through overlay of live pump status information on the complete setup. The markers acted as visual anchors for the information placed into the view; the actual point of information placement could be set at an offset from the marker. For more information and pictures, visit the webpage for this specific demonstration.
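Placing information at an offset from a marker can be sketched as follows, assuming the tracker delivers the marker pose as a rotation matrix `R` and translation `t` in camera coordinates and that a simple pinhole camera model applies. The function names, focal length, and image center are illustrative assumptions, not values from the demonstration.

```python
def mat_vec(R, v):
    """Multiply a 3x3 matrix (tuple of rows) by a 3-vector."""
    return tuple(sum(R[r][c] * v[c] for c in range(3)) for r in range(3))


def label_pixel(R, t, offset, focal_px, center):
    """Project a point given in marker coordinates into the image.

    R, t     : marker pose in camera coordinates (assumed tracker output)
    offset   : label anchor relative to the marker, in marker coordinates
    focal_px : focal length in pixels (assumed pinhole model)
    center   : principal point (cx, cy) in pixels
    """
    p = mat_vec(R, offset)
    x, y, z = p[0] + t[0], p[1] + t[1], p[2] + t[2]
    if z <= 0:
        return None  # the anchor lies behind the camera; nothing to draw
    return (center[0] + focal_px * x / z, center[1] + focal_px * y / z)


# Marker facing the camera, 2 m away; label anchored 0.5 m to its right.
identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
pixel = label_pixel(identity, (0.0, 0.0, 2.0), (0.5, 0.0, 0.0),
                    focal_px=800.0, center=(320.0, 240.0))
print(pixel)  # (520.0, 240.0)
```

Because the offset is expressed in the marker's own coordinate frame, the label stays attached to the same physical spot as the user's head moves.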

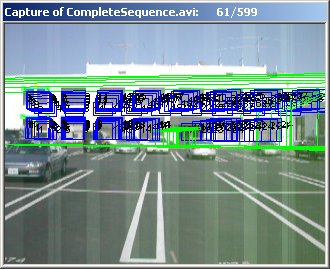

One of the major hurdles in outdoor AR is that the environment is often not cooperative and that it is not feasible to prepare the infrastructure with fiducial markers. In an urban area, however, there are man-made features that can be used as pseudo-fiducial markers because they are easy to recognize, for example the windows of buildings. In a project in collaboration with NRL, I developed a system that tracks windows on a building to obtain the user's location and attitude, which can then be used for a closely registered AR overlay.

The left image shows how, starting from an incorrect assumption, the overlay converges towards a registered match. The right image shows the overlay after converging to this match.

In non-urban areas without man-made features, only natural features can be tracked. A very prominent feature, at least in "structured" hilly terrain, is the horizon silhouette as seen from the user's viewpoint. My goal was to develop a system that matches terrain horizon silhouettes with silhouettes created from a Digital Elevation Model (DEM) map. Together with Jun Park, I developed a system for detecting the inflection points of a silhouette. Matching these with a pre-calculated silhouette (from a DEM map) allowed us to determine the attitude of the camera (and thus of the user) relative to the silhouette, which provides the registration parameters. Since this method does not necessarily provide unique results, a magnetometer was used to obtain a rough azimuth for initialization.

Left: detection of the silhouette and its strongest inflection points.

Right: overlay of the pre-calculated silhouette onto the view of the real world as seen through a Sony Glasstron® head-worn display.
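The two ingredients of the horizon approach, inflection points and a magnetometer-seeded search, can be illustrated with a minimal sketch. It makes strong simplifying assumptions: the silhouette is a clean 1D height profile (a real one would need smoothing first), the DEM panorama has one elevation sample per degree, and the second function compares raw profiles rather than inflection points, which is a simpler technique swapped in for illustration. All function names and the synthetic data are hypothetical.

```python
import math


def inflection_points(heights):
    """Return (index, strength) wherever the discrete second derivative
    of the silhouette changes sign. Strength is the size of the jump."""
    d2 = [heights[i - 1] - 2 * heights[i] + heights[i + 1]
          for i in range(1, len(heights) - 1)]
    points = []
    for k in range(len(d2) - 1):
        a, b = d2[k], d2[k + 1]
        if a * b < 0:
            # d2[k] belongs to sample k + 1, the last one before the change.
            points.append((k + 1, abs(a - b)))
    return points


def best_azimuth(observed, panorama, rough_azimuth, window=20):
    """Find the azimuth (degrees) at which the observed profile best fits
    the DEM panorama, searching only near the magnetometer reading."""
    best_az, best_err = None, None
    for az in range(rough_azimuth - window, rough_azimuth + window + 1):
        err = sum((observed[i] - panorama[(az + i) % 360]) ** 2
                  for i in range(len(observed)))
        if best_err is None or err < best_err:
            best_az, best_err = az % 360, err
    return best_az


# Synthetic silhouette: a sine-shaped ridge line, 100 pixel columns wide.
profile = [math.sin(2 * math.pi * (x + 0.5) / 50) for x in range(100)]
points = inflection_points(profile)
print([i for i, _ in points])  # [24, 49, 74]

# Synthetic panorama that repeats every 90 degrees, so the match is
# ambiguous without a rough heading.
panorama = [math.sin(2 * math.pi * a / 90) for a in range(360)]
observed = panorama[200:240]          # camera actually looks towards 200
azimuth = best_azimuth(observed, panorama, rough_azimuth=195)
print(azimuth)  # 200
```

The repeating panorama shows why the initialization matters: the same profile also fits at 20, 110, and 290 degrees, and only the restricted search window around the rough heading selects the correct one.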

When I began working in the area of Augmented Reality in 1997 and was searching for references and previous work, I realized that there was not a single conference devoted to this exciting topic. Most publications appeared at related Virtual Reality meetings, where AR was one of the "exotic" niche topics. After meeting with researchers David Mizell (at that time working for Boeing, now at Cray Research) and Gudrun Klinker (at that time working for ECRC, now a professor at TU Munich), we decided to start organizing an annual workshop devoted solely to the topic of Augmented Reality.

The first International Workshop on Augmented Reality (IWAR) was held in 1998 in San Francisco at the Fairmont hotel, co-located with the UIST conference and chaired by David Mizell. It was a great success. The following year, I chaired the second workshop, IWAR'99, again in San Francisco. In the following years, this event was "upgraded" to a symposium, leading to the acronym ISAR. Fittingly, in 2000 this symposium was held in Munich (the river "Isar" flows through Munich), organized by Gudrun Klinker. In 2001, attendance at the conference, held in October in New York and organized by Steve Feiner of Columbia University, was reduced because people hesitated to travel by air after the terrible events of 9/11. The following year, attendance was up again when the symposium merged with the biennially held ISMR, which had been organized in 1999 and 2001 in Yokohama. The 2002 International Symposium on Mixed and Augmented Reality (ISMAR) was held in Darmstadt, organized by Didier Stricker and the Fraunhofer Institute. In 2003, ISMAR took place in Tokyo, and in 2004 in Washington DC.