Stereography with Lightwave 3D

page 2

More Trouble

BG image composite with toe-in

There are other problems with using toe-in in Lightwave. One you might not expect is that you can no longer use a background image in the composite panel. A BG image composited this way is always centered on the camera axis, so it lands identically in both views and ends up at whatever depth has zero parallax in the raw renders. That means if you toe in your Lightwave stereo camera, your composited background will appear at the plane of the screen! Therefore, if you use a camera target, you must map your background onto a distant polygon. If your lenses are kept parallel, the BG will composite at infinity, where you would expect it to be, and all's well.

Left: Target centered on Humvee. Right: A better stereo target.

The obvious camera target for good framing and convenient tracking may not be where you want convergence. For example, in the Humvee demo on the Lightwave CD, the camera target is at the center of the vehicle. That may not be a bad compromise for an anaglyph, since it minimizes fringes on the main subject and in some ways makes for easier viewing. But to avoid breaking the stereo window, I would prefer zero screen parallax in this case to be somewhat in front of the Hummer, especially for a better display method such as polarized projection. This kind of floating target can be tough to set. Note that any camera target will cause toe-in, so don't use this feature if you want parallel lenses. Animating your camera without targets is not necessarily a bad thing: setting the camera move independently can result in more interesting, less mechanical camera moves.

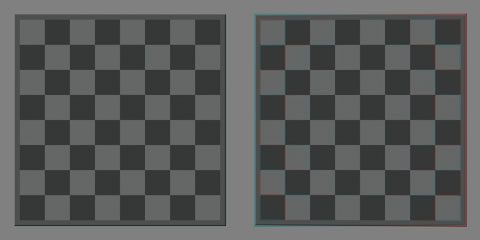

Parabolic distortion with toe-in

It is not generally realized that toe-in distorts the shape of the stereo field. Both the chessboards above were rendered with identical camera settings, except the chessboard on the left was rendered with parallel lenses and the board on the right with toe-in. I have aligned them to the same zero parallax. Notice how the parallel board appears flat, as it should, while the toe-in board is curved.
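The flattening difference can be checked numerically. The sketch below uses an idealized model, which is an assumption and not Lightwave's actual renderer. It places two pinhole cameras, each rotated about its vertical axis so that the optical axes cross at the convergence distance; parallel lenses are the case where that distance is infinite. It projects points spread across a flat board facing the cameras and reports how much their horizontal disparity varies. A flat plane should get the same disparity everywhere. The function names and the 65 mm / 35 mm / 2 m figures are hypothetical.

```python
import math


def project(point, interaxial, convergence, focal):
    """Project a 3-D point into left/right pinhole cameras at x = -/+ interaxial/2.

    Each camera is rotated about the vertical axis so the optical axes cross
    at (0, 0, convergence); pass convergence=math.inf for parallel lenses.
    Returns ((u_left, v_left), (u_right, v_right)) in the units of `focal`.
    """
    x, y, z = point
    half = interaxial / 2.0
    theta = math.atan2(half, convergence)  # per-camera toe-in angle; 0 when parallel
    c, s = math.cos(theta), math.sin(theta)
    # Camera-space coordinates of the point for the left camera (turned toward +x).
    xl, zl = (x + half) * c - z * s, (x + half) * s + z * c
    # Camera-space coordinates of the point for the right camera (turned toward -x).
    xr, zr = (x - half) * c + z * s, -(x - half) * s + z * c
    return (focal * xl / zl, focal * y / zl), (focal * xr / zr, focal * y / zr)


def horizontal_disparities(interaxial, convergence, focal, depth, xs):
    """Horizontal disparity (u_left - u_right) for points on a flat plane
    facing the cameras at distance `depth`, sampled at the given x positions."""
    out = []
    for x in xs:
        (ul, _), (ur, _) = project((x, 0.0, depth), interaxial, convergence, focal)
        out.append(ul - ur)
    return out


if __name__ == "__main__":
    xs = [-1000.0, -500.0, 0.0, 500.0, 1000.0]  # mm across a hypothetical 2 m wide board
    par = horizontal_disparities(65.0, math.inf, 35.0, 2000.0, xs)
    toe = horizontal_disparities(65.0, 2000.0, 35.0, 2000.0, xs)
    print("parallel disparity spread (mm):", max(par) - min(par))
    print("toe-in disparity spread (mm):  ", max(toe) - min(toe))
```

With parallel lenses every point on the plane gets the same disparity (focal length × interaxial / distance), apart from rounding. Aligning the pair to a chosen zero parallax shifts all points equally, so the plane stays flat. With toe-in the disparity changes from the center of the board to its edges, and that change is the curvature.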

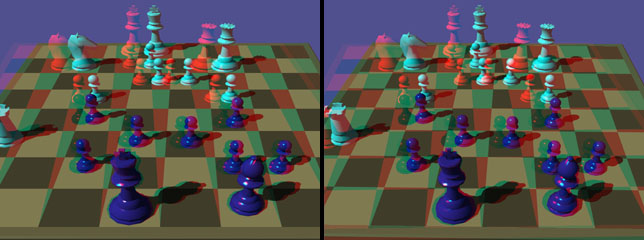

This parabolic distortion is readily apparent in a side-by-side comparison of otherwise identical scenes. The convergence angle on the right stereograph is only about two degrees. Even so, the vertical disparity is at the limit of tolerance (as a rule of thumb, keep it under one per cent of image height). Can you see the weirdness in the corners as your brain tries to fuse the vertical misalignment? Can you see how much easier the parallel rendering is to view? In my experience, the keystone distortion from toe-in limits usable depth before horizontal parallax does. That is, you reach intolerable eyestrain from vertically twisting the eyes before the images start to split apart from too much camera separation. This is especially a problem with off-screen effects. If you want accurate shapes and maximum stereo effect, you should keep your lenses parallel. Also note how badly the high-contrast white chess pieces ghost in the anaglyph, while the lower-contrast board hardly ghosts at all. You should consider this when texturing your objects.
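To put a number on the vertical misalignment, here is a minimal sketch under an idealized model. It assumes two pinhole cameras rotated about their vertical axes and a 36 × 24 mm frame standing in for Lightwave's Size 135 film setting. It projects a point that falls in the corner of the frame and expresses the left/right vertical offset as a percentage of image height, for comparison with the one per cent rule of thumb. The function names and example distances are hypothetical.

```python
import math

FILM_WIDTH_MM = 36.0   # assumed 35 mm still frame (Size 135)
FILM_HEIGHT_MM = 24.0


def project(point, interaxial, convergence, focal):
    """Project a 3-D point into left/right pinhole cameras at x = -/+ interaxial/2.

    Each camera is rotated about the vertical axis so the optical axes cross
    at (0, 0, convergence); convergence=math.inf gives parallel lenses.
    Returns ((u_left, v_left), (u_right, v_right)) on the film, in mm.
    """
    x, y, z = point
    half = interaxial / 2.0
    theta = math.atan2(half, convergence)  # per-camera toe-in angle
    c, s = math.cos(theta), math.sin(theta)
    xl, zl = (x + half) * c - z * s, (x + half) * s + z * c
    xr, zr = (x - half) * c + z * s, -(x - half) * s + z * c
    return (focal * xl / zl, focal * y / zl), (focal * xr / zr, focal * y / zr)


def corner_vertical_disparity_pct(interaxial, convergence, focal, depth):
    """Vertical disparity, as a percentage of film height, for a point at `depth`
    that a centered, un-rotated camera would place in the top-right corner."""
    x = depth * (FILM_WIDTH_MM / 2.0) / focal
    y = depth * (FILM_HEIGHT_MM / 2.0) / focal
    (_, vl), (_, vr) = project((x, y, depth), interaxial, convergence, focal)
    return 100.0 * abs(vl - vr) / FILM_HEIGHT_MM


if __name__ == "__main__":
    ia, f, dist = 65.0, 35.0, 2000.0  # hypothetical: 65 mm interaxial, 35 mm lens, 2 m
    angle = math.degrees(2.0 * math.atan2(ia / 2.0, dist))
    print(f"total convergence angle: {angle:.2f} deg")
    for name, conv in (("parallel", math.inf), ("toe-in", dist)):
        pct = corner_vertical_disparity_pct(ia, conv, f, dist)
        verdict = "OK" if pct < 1.0 else "over the 1% rule of thumb"
        print(f"{name:8s} corner vertical disparity: {pct:.2f}% ({verdict})")
```

Under these assumptions the parallel pair has exactly zero vertical disparity, because both cameras see the point at the same depth. The pair toed in to converge at 2 m has a total convergence angle a little under two degrees. Its corner disparity comes out just under one per cent, which is consistent with the observation above that even two degrees of convergence sits at the limit of tolerance.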

Parallel vs. Toe-in with twice the camera separation

Considering all the problems with toe-in stereography, the reader might wonder why the practice is so common. Clearly, toe-in is inspired by an overdrawn analogy between our eyes and a camera: since our eyes rotate inward, it is assumed a camera should too. But the organ and the device are not identical. Our brain builds a mental construct based on a flying-spot scan, projected on the inside of a sphere. The camera captures an indiscriminate view on a flat plane, which a human brain later reinterprets in the context of previous experience. Living stereopsis is immersed in the real three-dimensional world; a stereo image is an illusion.

But toe-in also has a practical justification. It is very expensive to design and machine a geometrically correct stereo camera in the physical world. Considering the dominance and ready availability of flattie cameras, it is much easier to adapt conventional equipment to stereo by using toe-in. In the above examples we have also seen that if the convergence angle and depth range are kept moderate, the distortions are so small that hardly anyone will notice. So, historically, this has been a reasonable, in fact unavoidable, economic trade-off. However, there has been a price in eyestrain, especially given the human tendency to push everything to the limit and beyond, and this may have contributed to the many failures of stereoscopic cinema.

But in the virtual world, this economy is harder to rationalize. Here, only a small amount of one-time coding could make eyestrain-free stereo available to millions. It would certainly make life easier to have a good stereo camera in Lightwave, complete with a preview viewfinder for setting the camera separation and zero parallax plane interactively, and the ability to save to various stereo formats, not just anaglyphs. Well, we can wish, can't we? One of the best things about Newtek has been its willingness to listen and respond to Lightwave users.

Even so, if one is generating Lightwave effects to composite with live-action footage shot with toe-in, one might be better off matching the original photography rather than insisting on ideological purity. I should note, however, that some physical stereo systems do not use toe-in, for example the Stereovision cine lenses and the IMAX stereo cameras. (IMAX has a unique philosophy of stereography that is beyond the scope of this article.)

Camera separation

Recall that the camera control panel allows setting an "Eye Separation" for Stereoscopic Rendering. Of course, Newtek means "Camera Separation." Sometimes you will hear this referred to as the "interocular," but if you check your dictionary, you will find that term defined as the distance between the eyes. The proper term for the distance between the lenses of a stereo camera is "interaxial." There is a difference, and it is significant in the chain of stereoscopic transmission. Besides, there is no surer way to impress your clients than to use, precisely, a lot of big words they don't understand.

DON'T MAKE THIS MISTAKE!

Left: Varying the Interaxial. Right: Varying the Interocular.

This, of course, raises the question of how far apart the cameras should be for a given situation. In addition to the interaxial, the amount of screen parallax is determined by the focal length of the lens, the depth range (the distance from the nearest subject to the furthest) and the magnification. The full depth-range equation need not concern us here; we will take a more informal approach. The classic rule of thumb for camera separation is the "1 in 30 rule": the distance between the lenses is one thirtieth of the distance from the camera to the nearest subject. This is based on the ASA stereo system (i.e., the Stereo Realist camera and its clones), so it assumes a 35mm lens, a far point at infinity, and small-scale projection. To accommodate other focal lengths, we will expand this to "Aubrey's rule" (for Steve Aubrey, who told it to me). It is really an abbreviation of the depth-range equation. The constant assumes a 35mm still camera, so you need to set the Film Size option in the Camera Panel to Size 135. We also assume a far point at infinity. This is not a perfect rule by any means (see below), but it is a useful starting point for determining your interaxial.

AUBREY'S RULE

interaxial=(distance to stereo window)/(focal length x .85)

all dimensions in mm
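Both rules of thumb are easy to script. The sketch below restates them as Python functions (the names are hypothetical). It also provides a quick sanity check. With a 35mm lens, Aubrey's divisor is 35 × 0.85 = 29.75, which is essentially the 1 in 30 rule, as it should be. For parallel lenses with a far point at infinity, the on-film deviation between the window and infinity is focal length × interaxial / window distance. Aubrey's rule therefore fixes that deviation at 1/0.85, about 1.18 mm. That interpretation of the 0.85 constant is my own reading of the geometry, not part of the rule as given.

```python
def one_in_thirty(near_distance_mm):
    """Classic rule: interaxial is 1/30 of the distance to the nearest subject."""
    return near_distance_mm / 30.0


def aubrey_interaxial(window_distance_mm, focal_length_mm):
    """Aubrey's rule: interaxial = window distance / (focal length x 0.85).

    Assumes a 35 mm still frame (Film Size 'Size 135') and a far point
    at infinity. All values in mm.
    """
    return window_distance_mm / (focal_length_mm * 0.85)


def on_film_deviation_mm(interaxial_mm, focal_length_mm, window_distance_mm):
    """Parallel-lens deviation on the film between the window and infinity."""
    return focal_length_mm * interaxial_mm / window_distance_mm


if __name__ == "__main__":
    window = 3000.0  # hypothetical: stereo window 3 m from the camera
    for f in (24.0, 35.0, 50.0, 85.0):
        ia = aubrey_interaxial(window, f)
        print(f"{f:4.0f} mm lens: interaxial {ia:6.1f} mm, "
              f"deviation {on_film_deviation_mm(ia, f, window):.2f} mm")
    print(f"1 in 30 rule at 3 m: {one_in_thirty(window):.1f} mm")
```

At the same window distance, a longer lens calls for a smaller interaxial, because it magnifies the parallax recorded on the film.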

You can find more information about the depth-range equation in the bibliography and links section.

Scale

Interestingly, changing the interaxial will alter the perceived scale of the stereo image. You can estimate the change by comparing the interaxial with the average human interocular (about 65 mm). That is, if you want to show the point of view of a 60 foot tall giant, you might try an interaxial of 650 mm; the effect will be to miniaturize the scene. Likewise, the point of view of a mouse would require an interaxial of around 10 mm, and everything would appear gigantic. Expanded-base images are "hyperstereos," while reduced-base images are "hypostereos."
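The scale estimate is a simple proportion. Here is a minimal sketch. It assumes an average interocular of 65 mm and a 6 foot person as the reference viewer; both figures are my assumptions, though they reproduce the 650 mm giant above. The function names are hypothetical.

```python
HUMAN_INTEROCULAR_MM = 65.0  # assumed average adult eye separation
HUMAN_HEIGHT_FT = 6.0        # assumed height of the reference viewer


def interaxial_for_viewer(viewer_height_ft):
    """Interaxial that mimics the eye separation of a viewer of the given height."""
    return HUMAN_INTEROCULAR_MM * viewer_height_ft / HUMAN_HEIGHT_FT


def apparent_scale(interaxial_mm):
    """Rough apparent size of the scene relative to life size.

    Below 1 the scene looks miniaturized (hyperstereo); above 1 it looks
    gigantic (hypostereo).
    """
    return HUMAN_INTEROCULAR_MM / interaxial_mm


if __name__ == "__main__":
    giant = interaxial_for_viewer(60.0)
    print(f"60 ft giant: interaxial {giant:.0f} mm, scene at {apparent_scale(giant):.2f}x")
    print(f"mouse (10 mm interaxial): scene at {apparent_scale(10.0):.1f}x")
```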

Combining parallax with other depth cues

Our brains use other cues in addition to stereopsis to reconstruct the depth of a scene, among them overlapping objects, diminishing sizes and atmospheric haze. These perspective cues give us a pretty good sense of depth even in ordinary flat pictures. If we reinforce stereopsis with strong perspective cues, we can get a dramatic 3-D effect with minimal parallax, which reduces the chance of eyestrain for the audience.

The maximum allowable parallax depends on basically two factors. The first is the amount of cross-talk in the particular display (i.e., how much one image bleeds through into the other). The second is the distance of the viewer from the screen (really, the visual angles). Anaglyphs have relatively high cross-talk (we saw this earlier, described as "ghosting") and thus can tolerate only relatively small parallax. Good polarized projection or LCD shutter glasses generally do much better and can carry more parallax. The farther the viewer is from the screen, the smaller the visual angle a given parallax subtends, and so the more screen parallax can be viewed without discomfort. You will have to experiment with your particular system to determine your maximum tolerable screen parallax. As a starting rule of thumb I would suggest about five per cent of your screen width, at least for small displays like TVs and computer monitors. Large screens, as in movie theaters, are a somewhat different situation, beyond the scope of this article.
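Here is a sketch of the parallax budget, taking the five per cent starting point above as an assumption rather than a measured limit for any display. It does three things:

- Converts the budget to pixels for a given image width.
- Shows how the visual angle of a fixed on-screen parallax shrinks as the viewer moves back.
- Estimates the background parallax for parallel lenses whose renders have been shifted so the stereo window sits at zero parallax. This assumes a far point at infinity and a 36 mm film width.

The function names and example numbers are hypothetical.

```python
import math

FILM_WIDTH_MM = 36.0  # assumed 35 mm still frame (Size 135)


def parallax_budget_px(image_width_px, fraction=0.05):
    """Maximum screen parallax in pixels under the 'five per cent of width' rule of thumb."""
    return image_width_px * fraction


def visual_angle_deg(parallax_mm, viewing_distance_mm):
    """Angle subtended at the viewer's eye by a given on-screen parallax."""
    return math.degrees(2.0 * math.atan2(parallax_mm / 2.0, viewing_distance_mm))


def far_point_parallax_fraction(interaxial_mm, focal_mm, window_mm):
    """Parallax of a point at infinity as a fraction of image width, for parallel
    lenses with the window at zero parallax: (focal x interaxial / window) / film width."""
    return focal_mm * interaxial_mm / window_mm / FILM_WIDTH_MM


if __name__ == "__main__":
    print(f"budget for a 1920 px wide image: {parallax_budget_px(1920):.0f} px")
    # Hypothetical: 25 mm of parallax on a monitor, viewed from 0.7 m and from 1.4 m.
    for d in (700.0, 1400.0):
        print(f"25 mm at {d / 1000:.1f} m subtends {visual_angle_deg(25.0, d):.2f} deg")
    frac = far_point_parallax_fraction(100.0, 35.0, 3000.0)
    status = "within" if frac <= 0.05 else "over"
    print(f"background parallax: {100 * frac:.1f}% of width ({status} the 5% budget)")
```

Because the far-point parallax is expressed as a fraction of image width, it stays the same on any screen size. Only its angular size changes with screen size and viewing distance.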
